The Shift from Prompt Engineering to Agent Engineering

Agent Engineering creates AI agents capable of independent decision-making, continuous learning, and complex problem-solving across various domains.

The launch of ChatGPT in late 2022 marked a significant milestone in the advancement of AI, demonstrating the potential of large language models to engage in human-like dialogue and perform complex tasks.

However, the rapid evolution of AI capabilities has exposed the limitations of traditional approaches to AI development. As we move from simple chatbots to more complex, autonomous systems, there's a growing need for a new paradigm: Agent Engineering. This approach aims to create AI agents capable of independent decision-making, continuous learning, and complex problem-solving across various domains.

But what is “agent engineering”? Agent engineering in generative AI involves designing and optimizing autonomous agents that use advanced AI models to perform tasks, make decisions, and generate outputs based on specific objectives. This process includes model design, training, behavioral programming, and ensuring the agents operate ethically and contextually in their environments.

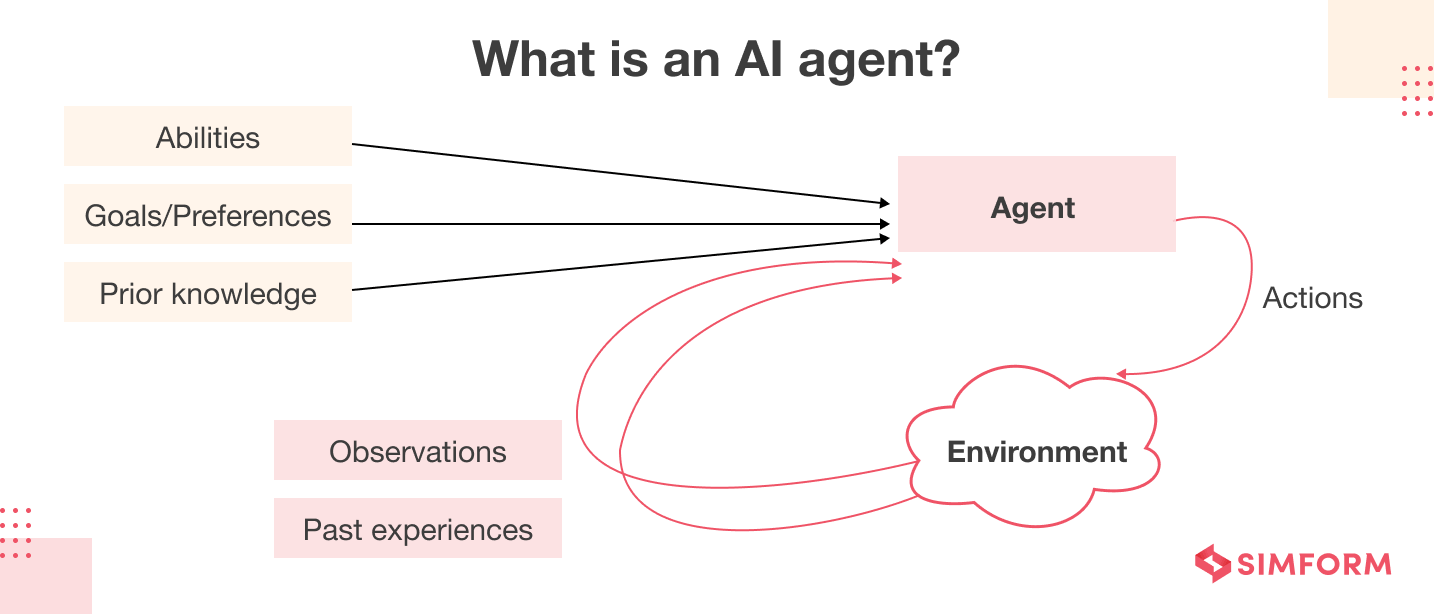

Understanding AI Agents

AI agents are software entities designed to perceive their environment, make decisions, and take actions to achieve specific goals. Unlike traditional AI systems that focus on narrow tasks, AI agents are characterized by:

Autonomy: The ability to operate independently without constant human intervention.

Adaptability: The capability to learn from experiences and adjust behavior accordingly.

Goal-oriented behavior: Pursuit of objectives through strategic planning and action.

Multi-modal interaction: The ability to process and respond to various input types (text, voice, images, etc.).
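The perceive, decide, act cycle described above can be sketched in a few lines. This is a minimal illustration, not a production design: `CounterEnvironment` and `GoalSeekingAgent` are hypothetical names invented for this example, and the toy only demonstrates autonomy and goal-oriented behavior (it does no learning and handles a single input type).

```python
from dataclasses import dataclass, field


@dataclass
class CounterEnvironment:
    """Toy environment: a single integer the agent can nudge up or down."""
    value: int = 0

    def observe(self) -> int:
        return self.value

    def apply(self, action: str) -> None:
        if action == "increment":
            self.value += 1
        elif action == "decrement":
            self.value -= 1


@dataclass
class GoalSeekingAgent:
    """Hypothetical agent that works toward a target value on its own."""
    target: int
    history: list = field(default_factory=list)

    def decide(self, observation: int) -> str:
        # Choose the action that moves the environment toward the goal.
        if observation < self.target:
            return "increment"
        if observation > self.target:
            return "decrement"
        return "stop"

    def run(self, env: CounterEnvironment, max_steps: int = 100) -> int:
        for _ in range(max_steps):
            observation = env.observe()          # perceive
            action = self.decide(observation)    # decide
            self.history.append((observation, action))
            if action == "stop":
                break
            env.apply(action)                    # act
        return env.observe()


env = CounterEnvironment(value=2)
agent = GoalSeekingAgent(target=5)
final_value = agent.run(env)
print(final_value)
```

The caller states only the goal (`target=5`); the agent works out the individual steps itself, which is the key difference from issuing one instruction per step.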

Compared to traditional AI systems, agents offer several advantages:

Flexibility: Agents can handle a wide range of tasks and adapt to new situations.

Scalability: Multiple agents can work together to solve complex problems.

Continuous improvement: Agents can learn and evolve over time, enhancing their performance.

The Limitations of Prompt Engineering

While prompt engineering has been crucial in harnessing large language models, it is limited by its reliance on trial and error, leading to inefficiencies in more complex tasks. It struggles to adapt to dynamic, real-time environments where AI systems need to make autonomous decisions and respond to changing contexts. Additionally, its domain-specific nature makes it challenging to scale AI solutions across diverse applications, highlighting the need for more robust, adaptive methodologies.

Here are some specifics:

Single-Interaction Focus: Prompt engineering primarily optimizes individual exchanges between the user and the AI system. This works well for straightforward, one-off tasks contained within a single conversation but falls short for complex, multi-step processes or long-term interactions. The inability to maintain context over extended periods hampers the AI's effectiveness in scenarios that require ongoing dialogue or the completion of intricate workflows. For instance, a prompt-engineered system might excel at answering isolated questions but struggle to assist with a multi-day project that involves iterative refinement, different chatbot “profiles,” and contextual understanding.

Lack of Autonomy and Decision-Making Capabilities: Prompt-based systems depend on human-provided instructions and on experienced users who understand the subtleties of prompt engineering, which limits their ability to operate independently. This dependency becomes a significant bottleneck for tasks that require autonomous decision-making or strategic planning. For example, while a prompt-engineered AI can follow specific instructions to analyze data, it may falter when asked to independently identify trends and make recommendations based on those insights. Furthermore, these systems often cannot prioritize tasks effectively or manage resources efficiently, as these functions typically require a level of judgment and contextual understanding beyond what prompt engineering can provide.

Scalability Challenges: As the complexity of tasks increases, the limitations of prompt engineering become more pronounced, particularly in terms of scalability. Designing effective prompts for intricate scenarios becomes an increasingly time-consuming and challenging process. This issue is compounded when attempting to maintain consistency across a wide range of use cases, each potentially requiring its own set of carefully crafted prompts. Moreover, prompt-based systems often struggle with seamlessly integrating external data sources or APIs, a crucial capability for many real-world applications. This integration challenge limits the system's ability to access and utilize up-to-date information or perform actions beyond its pre-programmed capabilities.

Prompt engineering’s strength lies in its simplicity, but this same simplicity limits its effectiveness in solving complex problems. Chatbots, often powered by prompt-based systems, are designed for streamlined, low-bandwidth interactions, which are usually insufficient for addressing more sophisticated or nuanced challenges.

These limitations underscore the need for a more comprehensive and flexible approach to AI development. As we move towards creating AI systems capable of autonomous operation, continuous learning, and complex decision-making, it becomes clear that a new paradigm is necessary.

The Agent Engineering Framework

The Agent Engineering Framework offers a structured alternative to prompt engineering, guiding the design and implementation of AI agents that are not only capable of performing specific tasks but are also adaptive, scalable, and aligned with overarching business or technical objectives. It consists of five key components:

1. Purpose and Goals

At the foundation of agent design is a clear definition of the agent's purpose and goals. This involves:

Identifying the specific problems or tasks the agent is meant to address.

Defining measurable objectives that align with the agent's intended function.

Establishing the scope of the agent's operations and decision-making authority.

2. Actions and Capabilities

This component focuses on defining what the agent can do and how it can interact with its environment:

Mapping out the range of actions the agent can take.

Specifying the types of data the agent can process and generate.

Determining the agent's ability to interact with external systems or APIs.
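One way to make the first two components concrete is to write them down as a declarative specification that the rest of the system can check against. The sketch below is an assumption about how that might look, not part of the framework itself: `AgentSpec`, its field names, and the sample support-agent values are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Declarative description of what an agent is for and what it may do."""
    purpose: str                       # the problem the agent addresses
    objectives: tuple[str, ...]        # measurable goals
    allowed_actions: frozenset[str]    # actions within the agent's authority
    input_types: frozenset[str]        # kinds of data the agent can process
    external_apis: frozenset[str] = frozenset()  # systems it may call

    def permits(self, action: str) -> bool:
        """Return True if the action falls inside the agent's defined scope."""
        return action in self.allowed_actions


# Hypothetical example values for a customer support agent.
support_spec = AgentSpec(
    purpose="Resolve routine customer support questions",
    objectives=("answer within 30 seconds", "escalate anything involving payments"),
    allowed_actions=frozenset({"search_knowledge_base", "draft_reply", "escalate_to_human"}),
    input_types=frozenset({"text"}),
    external_apis=frozenset({"ticketing"}),
)

print(support_spec.permits("draft_reply"))
print(support_spec.permits("issue_refund"))
```

Keeping the scope in data rather than buried in prompt text makes it possible to reject out-of-scope actions before they run.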

3. Proficiency Benchmarks

Establishing clear performance metrics is crucial for evaluating and improving agent functionality:

Defining specific, measurable criteria for each capability.

Setting performance thresholds that indicate successful operation.

Creating test scenarios that challenge the agent's abilities across various contexts.
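As a rough illustration of a proficiency benchmark, the sketch below scores an agent against a set of test scenarios and compares the pass rate to a threshold. The function names, the keyword-based `toy_classifier`, and the scenarios are hypothetical stand-ins chosen for this example; a real agent would replace the classifier.

```python
from typing import Callable, Sequence, Tuple

Scenario = Tuple[str, str]  # (input text, expected label)


def pass_rate(agent_fn: Callable[[str], str], scenarios: Sequence[Scenario]) -> float:
    """Fraction of scenarios where the agent's output matches the expected one."""
    passed = sum(1 for text, expected in scenarios if agent_fn(text) == expected)
    return passed / len(scenarios)


def meets_threshold(agent_fn: Callable[[str], str],
                    scenarios: Sequence[Scenario],
                    threshold: float) -> bool:
    """True when the measured pass rate reaches the required threshold."""
    return pass_rate(agent_fn, scenarios) >= threshold


def toy_classifier(text: str) -> str:
    """Stand-in agent: routes a ticket by simple keyword matching."""
    lowered = text.lower()
    if "invoice" in lowered or "refund" in lowered:
        return "billing"
    return "technical"


SCENARIOS = [
    ("My invoice is wrong", "billing"),
    ("I want a refund", "billing"),
    ("The app crashes on login", "technical"),
    ("I was charged twice", "billing"),  # the toy agent misses this one
]

score = pass_rate(toy_classifier, SCENARIOS)
print(score)
```

The fourth scenario is deliberately one the toy agent gets wrong, showing how a scenario set can expose a gap that a higher threshold would flag.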

4. Technology Integration

This aspect involves selecting and implementing the technologies that will power the agent:

Choosing appropriate AI models (e.g., large language models, specialized neural networks).

Implementing data retrieval and storage systems.

Integrating external APIs and services.

Developing mechanisms for continuous learning and model updating.

5. Orchestration and Anatomy

The final component addresses how the various parts of the agent work together and how multiple agents might collaborate:

Designing the agent's internal architecture, including decision-making processes.

Establishing protocols for inter-agent communication and collaboration.

Implementing mechanisms for task delegation and resource management.

Ensuring scalability to handle increasing complexity and workload.
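Task delegation between agents can be sketched as a small orchestrator that routes each request to the first worker advertising the required skill. This is a simplified assumption about one possible design; `Worker`, `Orchestrator`, and the two lambda handlers are hypothetical and stand in for real agents.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Worker:
    """A specialist agent, reduced here to a name, a skill set, and a handler."""
    name: str
    skills: set[str]
    handler: Callable[[str], str]


@dataclass
class Orchestrator:
    """Routes each task to the first registered worker with the needed skill."""
    workers: list[Worker] = field(default_factory=list)

    def register(self, worker: Worker) -> None:
        self.workers.append(worker)

    def delegate(self, skill: str, payload: str) -> str:
        for worker in self.workers:
            if skill in worker.skills:
                return f"{worker.name}: {worker.handler(payload)}"
        raise LookupError(f"no worker registered for skill {skill!r}")


orchestrator = Orchestrator()
orchestrator.register(
    Worker("summarizer", {"summarize"}, lambda text: text.split(".")[0])
)
orchestrator.register(
    Worker("counter", {"count_words"}, lambda text: str(len(text.split())))
)

print(orchestrator.delegate("count_words", "agents plan and act"))
print(orchestrator.delegate("summarize", "First point. Second point."))
```

Adding a capability means registering another worker, so the routing logic stays unchanged as the set of agents grows.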

By following this framework, developers can create AI agents that are more capable, adaptable, and autonomous than traditional AI systems. The Agent Engineering approach enables the creation of AI solutions that can handle complex, long-term tasks, learn from their experiences, and operate with a degree of independence that was previously unattainable.

This holistic approach to AI development opens up new possibilities across various industries, from personalized healthcare assistants to advanced financial analysis systems.


Key Technologies Enabling Agent Engineering

The development of sophisticated AI agents relies on a combination of cutting-edge technologies. These technologies work in concert to provide the capabilities necessary for autonomous, adaptable, and intelligent agent behavior. Let's examine the key technologies that are driving the field of Agent Engineering forward.

Large Language Models (LLMs)

Large Language Models form the foundation of many modern AI agents, providing them with broad knowledge and powerful language understanding and generation capabilities.

Core functionality: LLMs are trained on vast amounts of text data, allowing them to understand and generate human-like text across a wide range of topics and styles.

Contextual understanding: Advanced LLMs like GPT-4 and Claude 3 can grasp complex context, enabling more nuanced and relevant responses in various scenarios.

Zero-shot learning: LLMs can often perform tasks they weren't explicitly trained for, providing agents with flexibility in handling new situations.

Limitations: While powerful, LLMs can sometimes produce inaccurate or inconsistent information, necessitating additional technologies to enhance their reliability.

Retrieval-Augmented Generation (RAG)

RAG systems address some of the limitations of pure LLMs by combining them with external knowledge retrieval mechanisms.

Information accuracy: By retrieving relevant information from curated databases or documents, RAG systems can provide more accurate and up-to-date responses.

Domain specialization: RAG allows agents to access specialized knowledge bases, making them more effective in specific domains or industries.

Dynamic knowledge: Unlike static LLMs, RAG systems can incorporate new information without requiring full model retraining.

Implementation challenges: Effective RAG systems require careful curation of knowledge bases and efficient retrieval mechanisms to maintain performance.

RAG is an AI framework that combines large language models with external knowledge retrieval systems. It operates by first receiving a query or prompt, then retrieving relevant information from external knowledge sources. This retrieved information, along with the original query, is fed into a large language model, which generates a response based on this combined input. RAG enhances the accuracy and reliability of AI responses by grounding them in up-to-date, externally verified information. This approach allows AI systems to leverage both the broad capabilities of language models and the precision of curated knowledge bases, resulting in more informed and context-aware outputs.
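The retrieve-then-generate flow described above can be sketched as follows. This is a toy under stated assumptions: retrieval here is plain word overlap rather than the embedding search a real system would likely use, the `llm` argument is any callable that takes a prompt string (no specific model API is implied), and the documents are made-up sample data.

```python
import re
from typing import Callable, List


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the query."""
    query_terms = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_terms & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Combine the retrieved passages and the original query into one prompt."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def answer_with_rag(query: str, documents: List[str],
                    llm: Callable[[str], str]) -> str:
    """Retrieve supporting passages, then hand the combined prompt to the model."""
    passages = retrieve(query, documents)
    return llm(build_prompt(query, passages))


# Hypothetical knowledge base for illustration only.
DOCS = [
    "Refunds are accepted within 30 days of purchase.",
    "Support is available on weekdays from 9 to 5.",
    "Shipping takes three to five business days.",
]

query = "Within how many days are refunds accepted?"
# An echo function stands in for the model so the final prompt is visible.
print(answer_with_rag(query, DOCS, llm=lambda prompt: prompt))
```

Because the knowledge lives in `DOCS` rather than in model weights, updating an answer means editing a document, not retraining a model.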

For more on RAG, see:

Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks

Retrieval Augmented Generation: A Primer for Software Developers

Function Calling and API Integration

Function calling and API integration enable AI agents to interact with external systems and perform actions beyond text generation.

Expanded capabilities: Agents can access real-time data, perform calculations, or trigger actions in other systems.

Task automation: By integrating with various APIs, agents can automate complex workflows involving multiple steps and systems.

Enhanced accuracy: Function calling allows agents to retrieve precise information (e.g., current stock prices, weather data) rather than relying solely on their trained knowledge.

Scalability: As new APIs become available, agents can be updated to incorporate new functionalities without major architectural changes.
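A minimal sketch of the dispatch side of function calling is shown below. It assumes the model emits a JSON object with `name` and `arguments` keys; that shape is an assumption for illustration and not any particular vendor's format. The `tool` decorator, `dispatch`, and the hard-coded price table are likewise hypothetical.

```python
import json

TOOLS = {}


def tool(fn):
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def get_stock_price(symbol: str) -> float:
    """Looks up hard-coded sample data, not a real market feed."""
    sample_prices = {"ACME": 12.5}
    return sample_prices[symbol]


def dispatch(tool_call_json: str):
    """Parse a model-produced tool call and execute the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# In a real agent this JSON string would come from the model's output.
print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
print(dispatch('{"name": "get_stock_price", "arguments": {"symbol": "ACME"}}'))
```

New capabilities are added by decorating another function with `@tool`, which mirrors the point above about extending agents without architectural changes.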

Fine-tuning and Specialized Models

While general-purpose LLMs provide a strong foundation, fine-tuning and specialized models allow for more targeted and efficient agent behaviors.

Task-specific optimization: Fine-tuning allows agents to perform better on specific tasks or within particular domains.

Efficiency improvements: Specialized models can be smaller and faster than general-purpose LLMs, making them more suitable for resource-constrained environments. They also lend themselves to “edge AI” applications.

Behavioral alignment: Fine-tuning can help align agent behavior with specific ethical guidelines or company policies.

Continuous learning: Techniques like online fine-tuning enable agents to improve their performance over time based on interactions and feedback.

The integration of these technologies creates a powerful toolkit for Agent Engineering. LLMs provide a strong foundation of knowledge and language understanding. RAG enhances accuracy and allows for domain specialization. Function calling and API integration expand the agent's capabilities beyond language processing. Finally, fine-tuning and specialized models enable optimization for specific tasks and continuous improvement.

By leveraging these technologies in combination, developers can create AI agents that are not only knowledgeable and articulate but also capable of accurate information retrieval, real-world interactions, and ongoing adaptation to new challenges. This technological foundation is what enables the shift from simple prompt-based systems to truly autonomous and intelligent AI agents.

The Bottom Line on Agent Engineering

The shift from prompt engineering to agent engineering marks a significant leap in AI development, addressing the limitations of single-interaction systems and paving the way for more autonomous, adaptable, and capable AI solutions. By embracing the principles of agent engineering and leveraging technologies like advanced LLMs, RAG systems, and API integration, businesses can create AI agents that operate with greater independence, learn continuously, and tackle complex, multi-faceted tasks.

As this field evolves, it promises to unlock new possibilities across industries, from healthcare to finance, manufacturing to customer service. The future of AI lies not just in responding to prompts, but in proactively engaging with the world, making decisions, and solving problems. For enterprises looking to stay at the forefront of technological innovation, investing in agent engineering capabilities is no longer optional—it's imperative for remaining competitive in an increasingly AI-driven world.

Keep a lookout for the next edition of AI Uncovered, which will cover Agent use cases for your enterprise!
