OpenAI’s o3 Model: A Major Advancement in AI Computing Brings New Cost Considerations

OpenAI's o3 model introduced a new approach to AI problem-solving.

In the closing days of 2024, OpenAI unveiled o3, an AI model that goes a step further in changing how these systems process information. Unlike its predecessors in the GPT series, o3 takes an approach to problem-solving that more closely resembles deliberate human reasoning, while raising important questions about the economic viability of advanced AI systems.

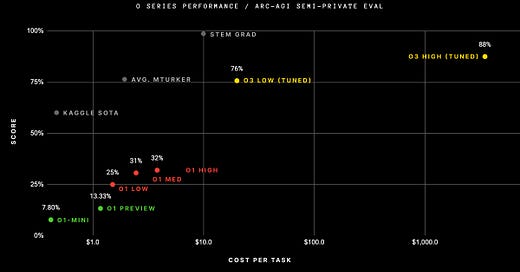

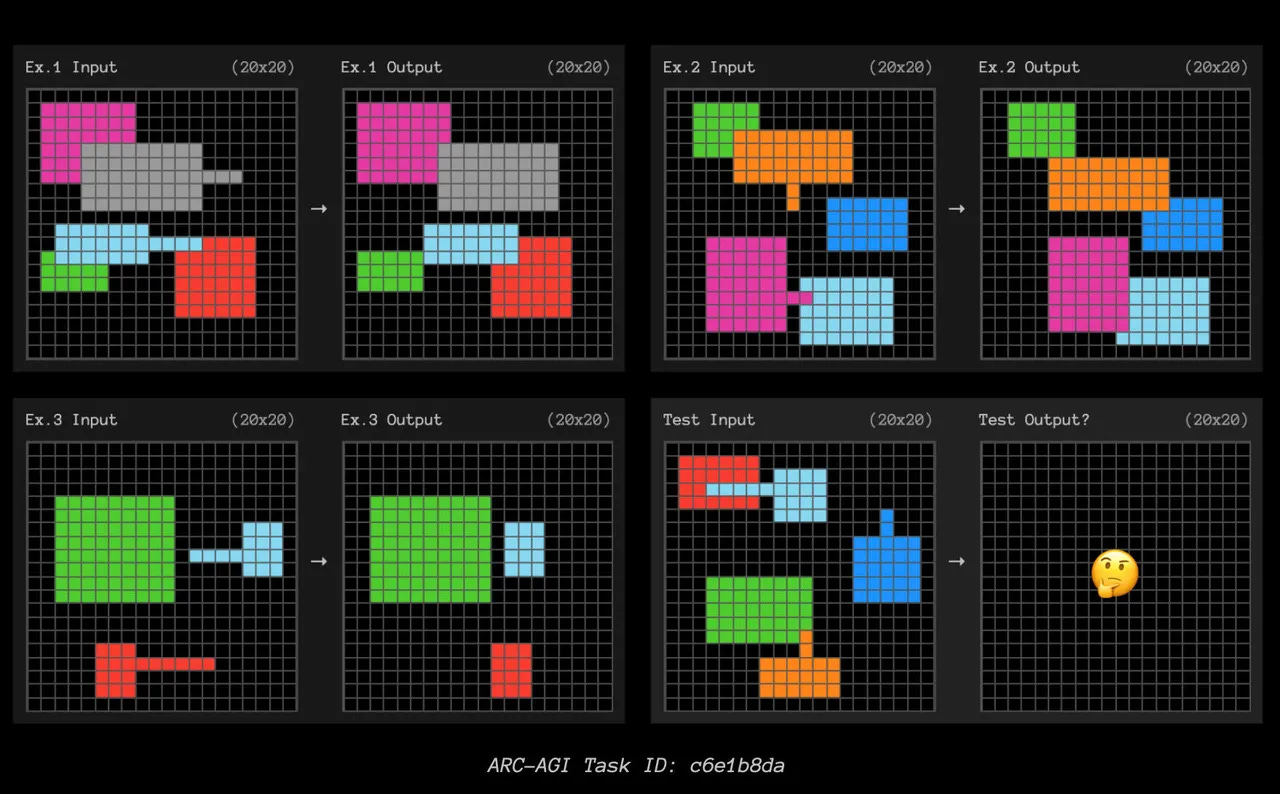

The model's release marks a clear shift from traditional AI architectures, as noted by prominent AI researcher François Chollet: "It is completely obvious from the latency/cost characteristics of the model that it is doing something completely different from the GPT series." This change in approach has produced remarkable results, including an 87.5% score on the ARC-AGI benchmark in high-compute mode, nearly triple the performance of its predecessor.

A Few Words on Model Naming

OpenAI’s model naming conventions have evolved to reflect advancements in capabilities and design focus. The introduction of the “o” series, starting with o1, marked a shift towards models emphasizing advanced reasoning and problem-solving skills. This series diverged from the previous “GPT” nomenclature to highlight its distinct approach. Subsequent iterations, such as o3, continued this progression, introducing enhanced reasoning capabilities and larger context windows.

In contrast, the GPT-4o model, where “o” stands for “omni,” was developed earlier and focused on multimodal functionalities, enabling it to process and generate text, images, and audio. Despite the numerical sequencing, GPT-4o predates the o3 model. The numbering reflects different developmental paths:

GPT-4o: Part of the GPT-4 lineage, emphasizing multimodal capabilities.

o3: A continuation of the “o” series, focusing on reasoning and analytical tasks.

This distinction underscores OpenAI’s dual focus on expanding both the versatility (through multimodal processing) and the cognitive depth (through enhanced reasoning) of its AI models.

Technical Innovation: Extended Computation Time

At the core of o3's capabilities lies "test-time compute": spending additional computation while answering a question rather than only during training. Unlike traditional models that produce an answer in a single pass, o3 can spend extended periods, potentially hours, exploring multiple solution pathways before committing to an answer, in a way loosely comparable to a person working through a problem step by step.

The model was evaluated in two configurations: a high-compute configuration that uses extensive computational resources for maximum performance, and a more practical low-compute variant that balances capability with efficiency. Even in low-compute mode, o3 achieves a 76% accuracy rate on the ARC-AGI benchmark, a score that exceeds average human performance on the benchmark.
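OpenAI has not published how o3 spends its test-time compute, so the following is only a generic sketch of one well-known way to trade compute for accuracy: draw several candidate answers and keep the most common one (majority voting). The `noisy_solver` function, its 40% single-attempt accuracy, and the sample budgets are hypothetical stand-ins, not properties of o3.

```python
import random
from collections import Counter

CORRECT_ANSWER = 7  # hypothetical ground truth for a toy problem

def noisy_solver(rng, p_correct=0.4):
    """Hypothetical stand-in for one model attempt: right 40% of the time,
    otherwise a wrong answer drawn uniformly from the other digits 0-9."""
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    return rng.choice([a for a in range(10) if a != CORRECT_ANSWER])

def solve_with_budget(rng, n_samples):
    """Spend more compute by drawing n_samples attempts and majority-voting."""
    votes = Counter(noisy_solver(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]

def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = sum(solve_with_budget(rng, n_samples) == CORRECT_ANSWER
               for _ in range(trials))
    return hits / trials

if __name__ == "__main__":
    for budget in (1, 6, 64):  # hypothetical low, medium, and high budgets
        print(f"samples={budget:>3}  accuracy={accuracy(budget):.2f}")
```

In this toy setting, accuracy rises with the sample budget while cost rises in direct proportion to it, which is the same trade-off the two o3 configurations illustrate at a much larger scale.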

The model's benchmark performances demonstrate its enhanced capabilities:

87.5% accuracy on the ARC-AGI benchmark in high-compute mode

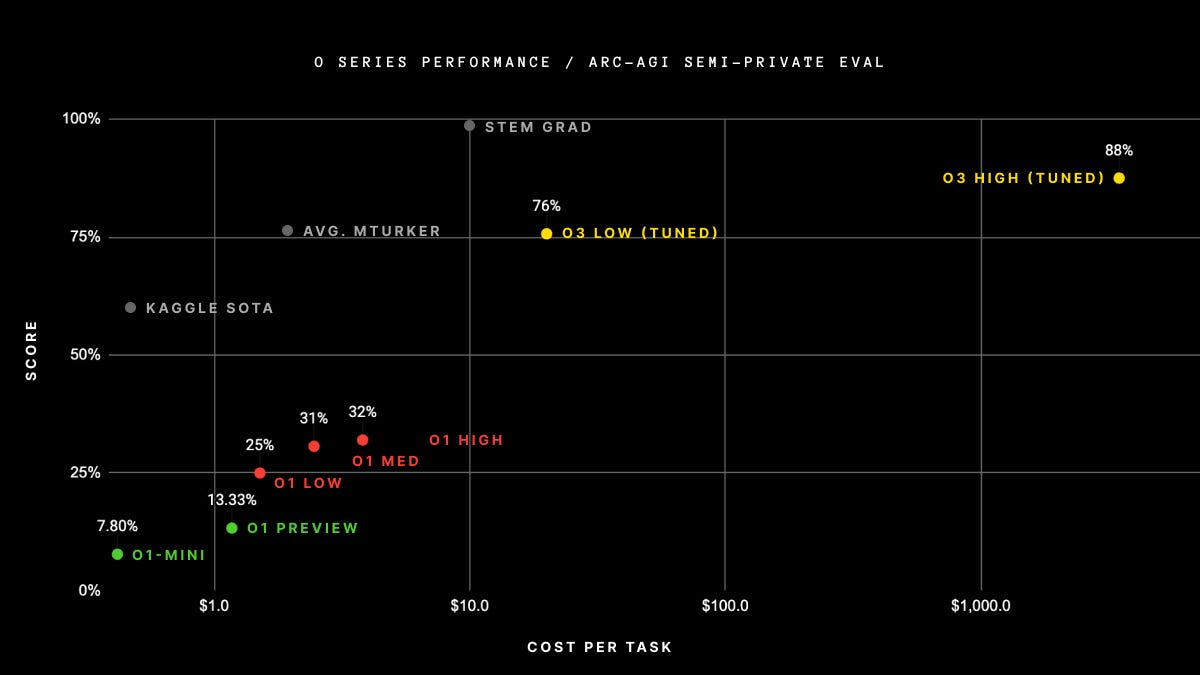

25.2% accuracy on the FrontierMath benchmark, solving research-level mathematical problems

76% accuracy on ARC-AGI in low-compute mode, setting a new baseline for efficient AI performance

These metrics represent not just improvements but a significant advance in AI problem-solving capabilities. However, this enhanced performance requires substantial computational resources that challenge current assumptions about AI system scaling and deployment.

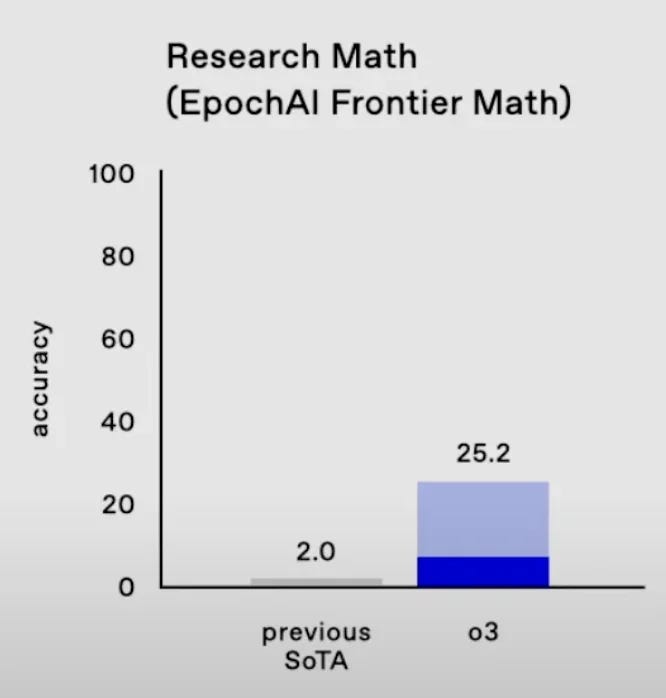

The ARC-AGI benchmark is derived from the Abstract Reasoning Corpus, designed to evaluate an AI system’s capacity to handle unfamiliar tasks and exhibit adaptable intelligence. It consists of visual challenges that necessitate grasping fundamental concepts like objects, boundaries, and spatial relationships.
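To make the task format concrete, here is a toy example in the style of the public ARC dataset, where each task is a set of input/output grid pairs and each grid is a list of rows of integer color codes. The task itself (mirror each row left to right) and the `mirror_solver` function are invented for illustration. Scoring is exact match: a test output counts only if every cell is correct.

```python
# A toy task in the style of ARC: grids are lists of rows, cells are color codes 0-9.
# This specific task (mirror each row) is hypothetical, made up for illustration.
toy_task = {
    "train": [
        {"input": [[1, 0, 0], [0, 2, 0]], "output": [[0, 0, 1], [0, 2, 0]]},
        {"input": [[3, 3, 0], [0, 0, 4]], "output": [[0, 3, 3], [4, 0, 0]]},
    ],
    "test": [
        {"input": [[5, 0, 0], [6, 7, 0]], "output": [[0, 0, 5], [0, 7, 6]]},
    ],
}

def mirror_solver(grid):
    """Hypothetical candidate rule: reverse every row of the grid."""
    return [list(reversed(row)) for row in grid]

def fits_training_pairs(solver, task):
    """Check that a candidate rule reproduces every demonstration pair."""
    return all(solver(pair["input"]) == pair["output"] for pair in task["train"])

def score(solver, task):
    """Exact-match scoring: a test grid counts only if every cell is right."""
    results = [solver(pair["input"]) == pair["output"] for pair in task["test"]]
    return sum(results) / len(results)

if __name__ == "__main__":
    print("fits training pairs:", fits_training_pairs(mirror_solver, toy_task))
    print("test score:", score(mirror_solver, toy_task))
```

The solver sees only a handful of demonstration pairs per task and must infer the rule from them, which is why the benchmark is used to probe adaptation to unfamiliar tasks rather than recall.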

The Cost Equation: A New Paradigm in Computational Economics

The operational costs of o3 are a significant departure from existing AI cost structures. Low-compute mode averages about $20 per task, and high-compute mode uses roughly 170 times as much compute, which implies a cost on the order of $3,400 per task if cost scales with compute. The widely quoted figure of about $350,000 is therefore best read as the approximate cost of a full high-compute benchmark run of around 100 tasks, not the cost of a single task. Either way, this is an unprecedented level of resource intensity for AI inference, and it stems from o3's extensive use of computation during its extended problem-solving process.

The model's dual operational modes present distinct economic considerations:

High-Compute Mode:

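Cost per task is estimated at roughly $3,400 (about 170 times the low-compute cost), so a benchmark run of around 100 tasks approaches $350,000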

Computational requirements exceed previous models by orders of magnitude

Resource demands raise questions about practical application scenarios

Low-Compute Mode:

Operational costs average $20 per task

Uses roughly 170 times less compute than high-compute mode

Still exceeds the operational costs of current AI models
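The figures in this list can be cross-checked with a few lines of arithmetic. The sketch below assumes cost scales linearly with compute, takes the $20 per task and 170x figures at face value, and assumes an evaluation set of 100 tasks (the set size is an assumption, not a figure from this article).

```python
LOW_COMPUTE_COST_PER_TASK = 20.0   # USD, figure quoted in the article
COMPUTE_MULTIPLIER = 170           # high-compute vs low-compute, per the article
ASSUMED_TASKS_IN_RUN = 100         # assumption: size of one evaluation set

def high_compute_cost_per_task(low_cost, multiplier):
    """Implied high-compute cost if cost scales linearly with compute."""
    return low_cost * multiplier

def run_cost(cost_per_task, n_tasks):
    """Total cost of evaluating n_tasks at a fixed cost per task."""
    return cost_per_task * n_tasks

per_task = high_compute_cost_per_task(LOW_COMPUTE_COST_PER_TASK, COMPUTE_MULTIPLIER)
total = run_cost(per_task, ASSUMED_TASKS_IN_RUN)
print(f"implied high-compute cost per task: ${per_task:,.0f}")
print(f"implied cost of a {ASSUMED_TASKS_IN_RUN}-task run: ${total:,.0f}")
```

Under these assumptions the implied per-task cost is about $3,400, and a figure near $350,000 corresponds to a whole 100-task run rather than to one task.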

The economic trajectory of computational costs suggests a likely pattern of rapid cost reduction as technology advances. Historical trends in computing efficiency, combined with ongoing developments in hardware optimization and scaling techniques, indicate that today's prohibitive costs could decrease by orders of magnitude in relatively short timeframes. This projected cost reduction curve presents a critical strategic consideration: while current pricing makes o3's high-compute capabilities accessible only to well-resourced organizations, the technology's eventual commoditization could reshape the competitive AI landscape.
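To see what a decrease "by orders of magnitude in relatively short timeframes" would require, the sketch below computes how long a cost takes to fall below a target under a constant halving period. The starting cost, the target, and the halving periods are illustrative assumptions, not forecasts or measured trends.

```python
import math

def months_until_below(start_cost, target_cost, halving_months):
    """Months for a cost to fall to target_cost if it halves every halving_months."""
    if target_cost >= start_cost:
        return 0.0
    halvings_needed = math.log2(start_cost / target_cost)
    return halvings_needed * halving_months

def cost_after(start_cost, months, halving_months):
    """Cost after a given number of months under the same halving assumption."""
    return start_cost * 0.5 ** (months / halving_months)

if __name__ == "__main__":
    start, target = 3400.0, 20.0          # hypothetical per-task costs in USD
    for halving in (6, 12, 24):           # hypothetical halving periods in months
        months = months_until_below(start, target, halving)
        print(f"halving every {halving:>2} months: about {months / 12:.1f} years")
```

The point of the exercise is sensitivity: the time to commoditization depends almost entirely on the assumed halving period, so planning should consider a range of rates rather than a single projection.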

This cost evolution pattern raises fundamental questions about market dynamics and economic preparation. The key strategic consideration shifts from immediate affordability to long-term planning for a future where such computational capabilities become widely accessible. Organizations must consider not just current cost barriers but the implications of widespread access to these advanced computational capabilities.

Performance Analysis: Is This AGI?

o3's performance metrics reveal both groundbreaking achievements and notable limitations, painting a complex picture of its technological capabilities. The model's success on established benchmarks demonstrates significant advances in problem-solving abilities, while also highlighting areas where further development remains necessary.

The AI research community has noted that while o3's achievements mark significant progress, they don't necessarily indicate proximity to Artificial General Intelligence (AGI). As Chollet explains, "You'll know AGI is here when the exercise of creating tasks that are easy for regular humans but hard for AI becomes simply impossible." That criterion remains unmet, which underscores both the model's achievements and its distance from human-like general intelligence.

The performance characteristics of o3 highlight a critical insight into AI development: raw computational power and sophisticated problem-solving abilities don't automatically translate into human-like intelligence. Instead, they represent a different approach to intelligence, one that may complement rather than replace human cognitive capabilities.

Industry and Societal Implications

The emergence of o3's capabilities signals a shift in how organizations and society might approach complex problem-solving tasks with future AI systems. This technological advancement raises fundamental questions about the future of work and the distribution of computational resources across different sectors of the economy.

The employment implications extend beyond simple automation concerns. The key question, raised repeatedly in technical discussions, centers on the economic calculus of human versus computational problem-solving. When organizations can apply massive computational resources to complex tasks, it reshapes traditional assumptions about workforce development and skill specialization. This dynamic suggests a shift toward roles that emphasize uniquely human capabilities—particularly in areas where o3 shows limitations, such as intuitive reasoning and cross-contextual understanding.

Accessibility presents another critical consideration. The stark divide between high-compute and low-compute capabilities creates a two-tier system of AI utilization. Organizations must navigate this divide while considering:

Resource allocation strategies

Competitive advantages in computational capabilities

Strategic positioning in an evolving technological environment

The development trajectory of o3 points toward an AI ecosystem where computational intensity becomes a key differentiator in problem-solving capabilities. This shift requires organizations to develop new frameworks for evaluating the trade-offs between computational costs and problem-solving effectiveness.

As the technology matures, the key challenge lies not just in advancing computational capabilities, but in developing frameworks that effectively integrate these capabilities into existing organizational structures. The o3 model represents not just a technical achievement, but a preview of the complex decisions organizations will face as AI capabilities continue to advance.

This transformation demands careful consideration of both technical capabilities and broader societal implications, suggesting a future where success depends on balancing computational power with practical implementation strategies.

Keep a lookout for the next edition of AI Uncovered!
