Modern Information Wars

What’s this about: Our last two articles covered how artificial intelligence (AI) is being used in warfare, from weapon development to augmented soldiers. However, AI is not only helping nations prepare for the front lines; it is also being deployed in the shadows to target the minds of populations, gain access to troves of sensitive data, and launch cyberattacks against critical infrastructure. It is important to keep highlighting these modern-day tactics, as conflicts like the Russian invasion of Ukraine demonstrate just how much damage they can cause.

Russia-Ukraine Information Battles

The Russian invasion of Ukraine has brought war back into the international spotlight. Russia has reportedly poured resources into developing AI technologies, which has led to the use of AI-generated propaganda and disinformation. But the current invasion is only the latest example of AI being deployed for nefarious purposes in conflict.

The rise of web technology has led to a direct increase in information warfare, with AI playing a massive role in disinformation campaigns, cyberattacks, and much more.

According to Snorkel AI, which began as a research project in the Stanford AI Lab, Russia is currently engaging in three types of AI information warfare:

Disinformation: Russia has built the most comprehensive influence operation in human history.

Cyberwarfare: Russia relies on sophisticated cyberwarfare operations that include malware to cripple Ukrainian weapon systems and shut down crucial infrastructure.

Kinetic warfare: Kinetic offenses use automated signal collection and AI to guide precision weaponry and specialized army units towards targets.

Deep Fakes

One of the tactics used in the Russian invasion of Ukraine, and in many other domestic and international conflicts around the globe, involves deep fakes: fabricated media created by AI. Governments or individuals who want to create deep fakes first use deep learning techniques to train algorithms that produce realistic-looking pictures, audio, and even videos.

Most of the highly sophisticated deep fakes impersonate real-life individuals, often powerful or famous public figures. That said, deep fake technology can also be used to create completely synthetic individuals by combining multiple faces.

The Kremlin has reportedly already deployed deep fakes on social media platforms like Facebook and Reddit, including one of a Ukrainian teacher and a synthetic Ukrainian influencer. For now, most platforms detect these deep fakes reasonably quickly and take them down, but as the technology continues to improve rapidly, this will become increasingly difficult.

Check out this 2020 deep fake of Russian president Vladimir Putin addressing the United States:

Deep fakes first appeared in 2017, and they have since raised serious concerns about their use in both global conflicts and domestic affairs. They will continue to play an increasingly prominent role in AI information wars.

>>> Check out our past article titled “AI, Performance Capture, Games and Deepfakes.”

>>> Make sure to keep an eye out for a future blog article on deep fakes in politics, which could cause a brand new set of domestic and international problems.

AI-Generated Fake Media

AI has also been taught how to generate highly plausible fake news articles and reports. There have already been examples of AI-generated phony news articles on everything from presidential elections to the current COVID-19 pandemic.

AI research company OpenAI has been at the forefront of developing natural language models that could be used to generate fake news in the wrong hands. According to OpenAI itself, such models could be misused to generate misleading news articles, impersonate people online, or automate abusive and spam content on social media.

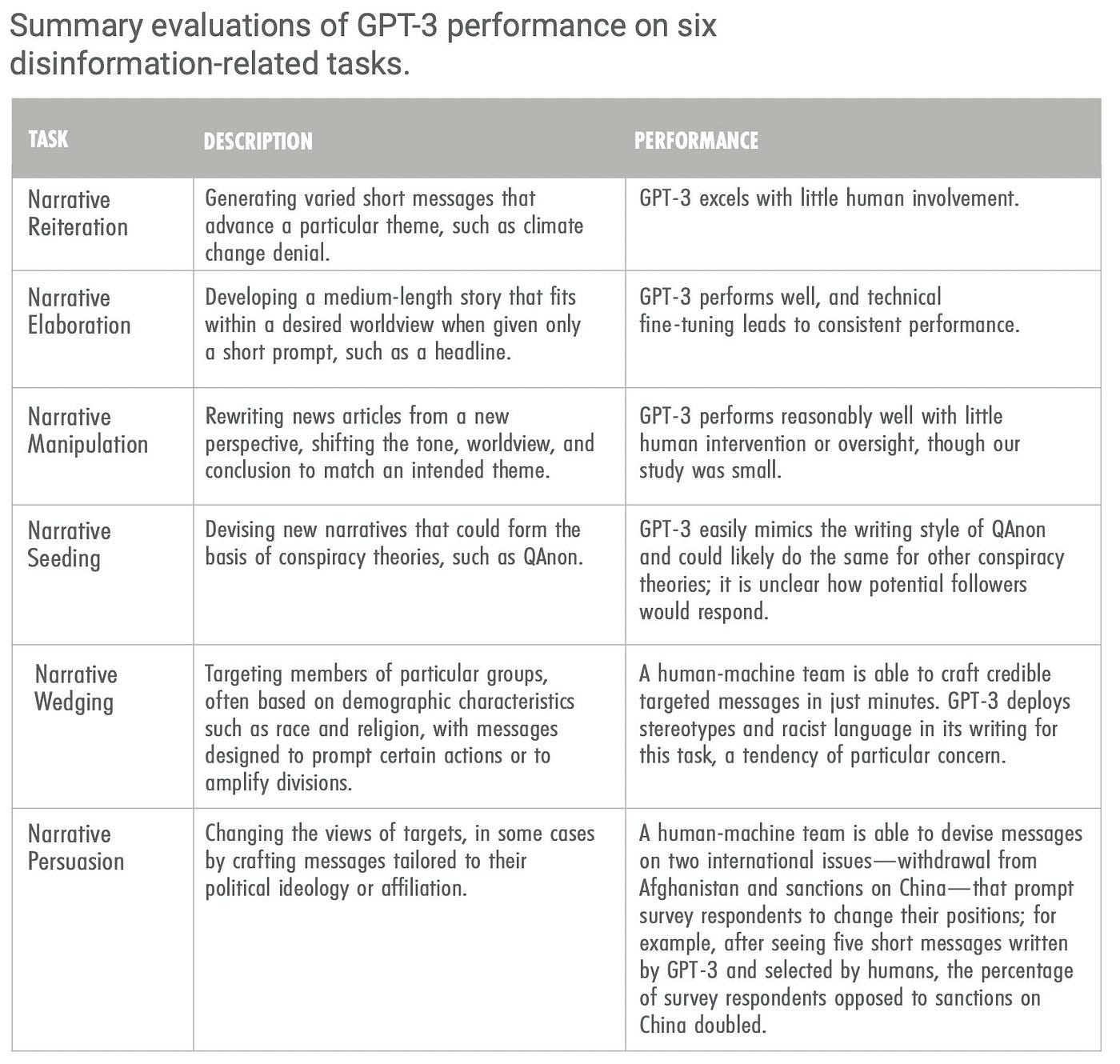

These abilities were tested when a group at Georgetown University’s Center for Security and Emerging Technology (CSET) spent six months using GPT-3, OpenAI’s most powerful language model at the time, to generate misinformation. The group used GPT-3 to write stories around a false narrative, alter news articles, and compose tweets pushing disinformation.

Check out this summary of GPT-3 performance on disinformation-related tasks by the CSET group:

>>> If you want to learn more about natural language processing (NLP) models such as GPT-3, make sure to check out “What is Natural Language Processing?”

Besides fake news articles, AI can also generate fake reports that trick experts in academia, government, and business. This extends even to the highest levels of academic research, such as peer-reviewed papers. For example, AI can be used to generate misleading material about COVID-19 research and vaccines that could fool both the public and experts in the field. AI-generated reports can also push misinformation about cybersecurity vulnerabilities to confuse cyberthreat hunters. For now, experts can detect fake reports through various fact-checking processes, but as these reports grow more sophisticated, they could have major implications for public health and safety.

GAN-Enabled Deep Fakes

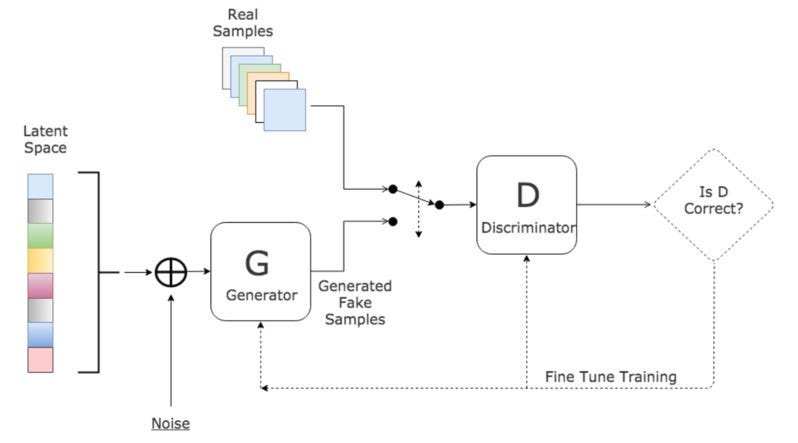

Fake images, videos, and voice clips are other tactics used in information warfare. One way to create such media is with generative adversarial networks (GANs), which also have legitimate uses such as enhancing images and helping train medical algorithms. The problem is that GANs are making it even easier to create doctored videos, hoaxes, and forged voice clips.

Let’s take a quick look at what makes a GAN.

In simple terms, a GAN consists of two neural networks pitted against each other, with each one improving by competing against the other. The first, the generator, is trained to produce new examples; the second, the discriminator, tries to classify examples as either real or fake. The two models are trained together adversarially until the discriminator is fooled about half the time, which indicates that the generator is producing plausible examples.

This process is what makes GANs so useful for creating fake media. Anyone with a moderate level of expertise in the field can use them to generate fake images and videos.
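To make the adversarial setup more concrete, here is a minimal Python sketch of the two loss functions commonly used to train a GAN. It follows the binary cross-entropy formulation from the original GAN paper, combined with the widely used "non-saturating" generator loss. This is an illustration under simplifying assumptions: there are no real neural networks, the code takes the discriminator's output probabilities directly, and the function names are hypothetical. What it shows is what "fooled about half the time" means numerically: when the discriminator outputs 0.5 for everything, it can no longer tell real from fake.

```python
import math


def bce(p: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy of predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """The discriminator is rewarded for D(real) -> 1 and D(fake) -> 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator is rewarded for D(fake) -> 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # Early in training: the discriminator easily spots the fakes.
    d_real, d_fake = [0.9, 0.95], [0.05, 0.1]
    print(f"early: D loss={discriminator_loss(d_real, d_fake):.3f}, "
          f"G loss={generator_loss(d_fake):.3f}")  # D loss=0.157, G loss=2.649

    # Equilibrium: the discriminator outputs 0.5, so it can do no better than guessing.
    d_real, d_fake = [0.5, 0.5], [0.5, 0.5]
    print(f"equilibrium: D loss={discriminator_loss(d_real, d_fake):.3f}, "
          f"G loss={generator_loss(d_fake):.3f}")  # D loss=1.386, G loss=0.693
```

In the first case, the discriminator easily separates real from fake, so its loss is low and the generator's loss is high. At the theoretical equilibrium, generated data matches real data, and the best a discriminator can do is output 0.5. That yields a discriminator loss of 2 ln 2, or about 1.386. Training an actual GAN means alternating gradient steps on these two losses for two neural networks.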

It’s important to note that there have yet to be GAN-enabled deep fakes sophisticated enough to fool the masses. That said, we are extremely close to seeing them. The current problem is that most content moderation, whether on Facebook, Twitter, or another social media platform, is carried out by algorithms that are less discerning than the human eye. The other major concern is that GAN technology is constantly evolving and growing more sophisticated.

Cyber Warfare

Deep fakes, fake news, and GANs make up only part of the larger AI-enabled information and cyber campaigns. AI is also strengthening existing cyber capabilities, with rapid advancements amplifying the speed and power of attacks in cyberspace.

For one, advances in autonomy and machine learning enable new cyberattacks that put physical systems at risk. Foreign or domestic actors can also attack AI systems directly to gain access to their machine learning algorithms, which could expose massive amounts of data from facial recognition and intelligence collection systems. Such information could help malicious actors target individuals or groups for disinformation campaigns and support intelligence, surveillance, and reconnaissance missions.

Since some AI systems are used alongside cyber offense tools, there is an increased risk of sophisticated cyberattacks being executed at large scale across networks, and malicious actors can carry out these attacks with a high degree of anonymity. For example, attackers could use advanced persistent threat (APT) tools, which are designed to secretly gain access to networks and data and remain undetected for long periods, to discover new vulnerabilities. Stuxnet is one example of such a tool: a malicious computer worm widely believed to have been built jointly by the U.S. and Israel. Stuxnet targets supervisory control and data acquisition (SCADA) systems, and it was launched to damage Iran’s nuclear program.

Cyber tactics are also often used for asymmetric political warfare, which Russia has made a fundamental part of its strategy toward both the West and its neighbors. Russia’s approach to AI-driven asymmetric warfare rests on a few main points:

Digital platforms and commercial tools can be easily weaponized.

Digital information warfare is highly effective and costs far less than traditional means of warfare.

Digital warfare operators can be disguised as other entities, making attack attribution difficult and uncertain.

Countries that wish to launch asymmetric political warfare can do so by purchasing ads on major online platforms like Facebook and Google, setting up tens of thousands of automated bot accounts on Twitter, producing misleading and divisive content, and much more.

Russia is not alone. China, one of the leaders in AI development, also relies on AI-enabled asymmetric political warfare. In 2019, the Australian Security Intelligence Organisation concluded that China was responsible for cyberattacks on the Australian parliament and three political parties ahead of the Australian general election.

Tactics like these will continue to grow and become more complex as more nations acquire the capability to use them. They are a large part of modern warfare and will remain so in the future.

>>> We will dive deeper into AI-enabled asymmetric political warfare in a follow-up article.

AI as a Defensive Measure

AI cuts both ways in information wars. Not only can it be used as a weapon to disseminate propaganda and fake news or to launch cyberattacks, but it can also be used to counter such campaigns. In 2021, MIT Lincoln Laboratory’s Artificial Intelligence Software Architectures and Algorithms Group launched a program called Reconnaissance of Influence Operations (RIO). The RIO program created a system that automatically detects disinformation narratives and the individuals spreading them on social media networks.

The RIO system is unique in combining multiple analytics techniques to create a comprehensive view of disinformation narratives and how they spread. In addition, it can detect and quantify the impact of accounts operated by both bots and humans, which matters because most of today’s automated systems can only detect bots. The system can also forecast how different countermeasures might stop the spread of disinformation campaigns.
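The RIO system’s actual methods are not reproduced here. As a much simpler, hypothetical illustration of what quantifying an account’s impact can mean, the Python sketch below measures an account’s reach. Reach here is the number of distinct accounts downstream of it in a reshare graph, found with a breadth-first search. The graph format, account names, and function names are assumptions made for this example.

```python
from collections import deque


def downstream_reach(reshares: dict[str, list[str]], source: str) -> int:
    """Count distinct accounts reachable from `source` by following reshare edges.

    `reshares` maps each account to the accounts that reshared its posts
    (a simplified, hypothetical data model).
    """
    seen = {source}
    queue = deque([source])
    while queue:  # breadth-first search over the reshare cascade
        account = queue.popleft()
        for resharer in reshares.get(account, []):
            if resharer not in seen:
                seen.add(resharer)
                queue.append(resharer)
    return len(seen) - 1  # do not count the source itself


def rank_by_reach(reshares: dict[str, list[str]]) -> list[tuple[str, int]]:
    """Rank every account in the graph by reach, largest first (ties broken by name)."""
    accounts = set(reshares) | {a for targets in reshares.values() for a in targets}
    scored = [(a, downstream_reach(reshares, a)) for a in accounts]
    return sorted(scored, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    cascade = {
        "account_x": ["user_a", "user_b"],
        "user_a": ["user_c"],
        "user_b": ["user_c", "user_d"],
    }
    print(rank_by_reach(cascade))
    # [('account_x', 4), ('user_b', 2), ('user_a', 1), ('user_c', 0), ('user_d', 0)]
```

Raw reach like this is a crude proxy. It does not distinguish bots from humans, and it cannot tell whether downstream accounts would have shared the narrative anyway.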

Detecting disinformation online is one of the greatest challenges for social media companies. The challenge grows even greater during military conflicts, when events unfold at an incredibly rapid rate. Disinformation campaigns can then be launched one after another, making it extremely difficult to determine what is real and what is fake.

AI is also key to improving cybersecurity against AI-enabled attacks. AI tools help organizations and governments anticipate and neutralize threats, and respond more quickly and effectively when attacks occur.

AI’s use in cybersecurity is often focused on four main objectives:

Massive information management: Large volumes of incoming situations and attacks are prioritized, or flagged as false threats.

Real-time response: Allows immediate action to be taken.

Automation: Responses to multiple threats can be automated.

Prediction: Forensic analysis of previous attacks is used to strengthen defenses against future ones.
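The Python example below is a rough sketch of the first and third objectives: prioritizing or flagging alerts, then automating a response. It scores each alert by severity weighted by detector confidence and sets aside low-confidence alerts as likely false positives. It then applies a simple rule to decide between blocking a source and sending it for human review. The `Alert` fields, the 1 to 10 severity scale, and both thresholds are hypothetical. Real security tooling would use learned models and far richer signals.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    source_ip: str
    severity: int      # hypothetical scale: 1 (low) to 10 (critical)
    confidence: float  # hypothetical detector confidence that the threat is real, 0.0-1.0


def triage(alerts: list[Alert], false_positive_threshold: float = 0.2):
    """Return (actionable alerts, highest priority first; likely false positives)."""
    likely_false = [a for a in alerts if a.confidence < false_positive_threshold]
    actionable = [a for a in alerts if a.confidence >= false_positive_threshold]
    # Priority = severity weighted by confidence; highest first.
    actionable.sort(key=lambda a: a.severity * a.confidence, reverse=True)
    return actionable, likely_false


def auto_respond(alert: Alert, block_threshold: float = 7.0) -> str:
    """Hypothetical automated rule: block high-priority sources, queue the rest for review."""
    priority = alert.severity * alert.confidence
    action = "block" if priority >= block_threshold else "review"
    return f"{action} {alert.source_ip}"


if __name__ == "__main__":
    # Addresses come from the 192.0.2.0/24 documentation range.
    incoming = [
        Alert("192.0.2.20", severity=4, confidence=0.7),
        Alert("192.0.2.10", severity=9, confidence=0.9),
        Alert("192.0.2.30", severity=8, confidence=0.1),
    ]
    queue, flagged = triage(incoming)
    for alert in queue:
        print(auto_respond(alert))  # "block 192.0.2.10", then "review 192.0.2.20"
    print("likely false positives:", [a.source_ip for a in flagged])
```

The thresholds are parameters because they encode a trade-off. Lowering them catches more real attacks but sends more false alarms to analysts.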

Global Threat of AI-Enabled Campaigns

Offensive cyber campaigns have been around for years. Some of the earliest landmark examples include Russia’s 2007 cyberattacks against Estonia and Stuxnet, which was discovered in 2010. The former was the first known cyberattack on an entire country. Today’s AI-enabled campaigns, including those surrounding the Russian invasion of Ukraine, are far more overt and large scale. At the same time, few protocols against such tactics and technologies have been enacted on a global scale, because they are relatively new and constantly evolving. The Russia-Ukraine conflict is one example of such information wars, but far more complex campaigns with massive implications could emerge in the near future.

The best preventive measure experts can take against these malicious technologies is to rely on the same AI that creates them. By deploying AI tools such as deep fake detectors, we can stop deep fakes and other AI-enabled propaganda in their tracks far sooner than any human could. Major tech companies must also improve their AI-enabled content moderation, especially as algorithms make fake media easy to create. Lastly, cyber systems must be constantly adapted to the changing AI landscape to prevent large-scale attacks that could cripple critical government and commercial infrastructure.

AI-enabled information warfare is one of the greatest threats to democracies worldwide, so it’s imperative for governments, academia, and businesses across the globe to collaborate on improving measures against it.