How Neurosymbolic AI Rewrites the Reliability Equation

Neurosymbolic AI combines neural networks' pattern recognition with symbolic AI's formal logic, addressing reliability issues in AI systems.

The same neural networks that power breakthrough capabilities in language understanding and pattern recognition can be unreliable when precision matters most. A system that can write poetry indistinguishable from human creation will sometimes confidently fabricate financial regulations that never existed.

Some argue that this is an architectural limitation baked into how neural networks process information. Pure deep learning systems excel at statistical pattern matching but lack the formal reasoning structures that ensure logical consistency.

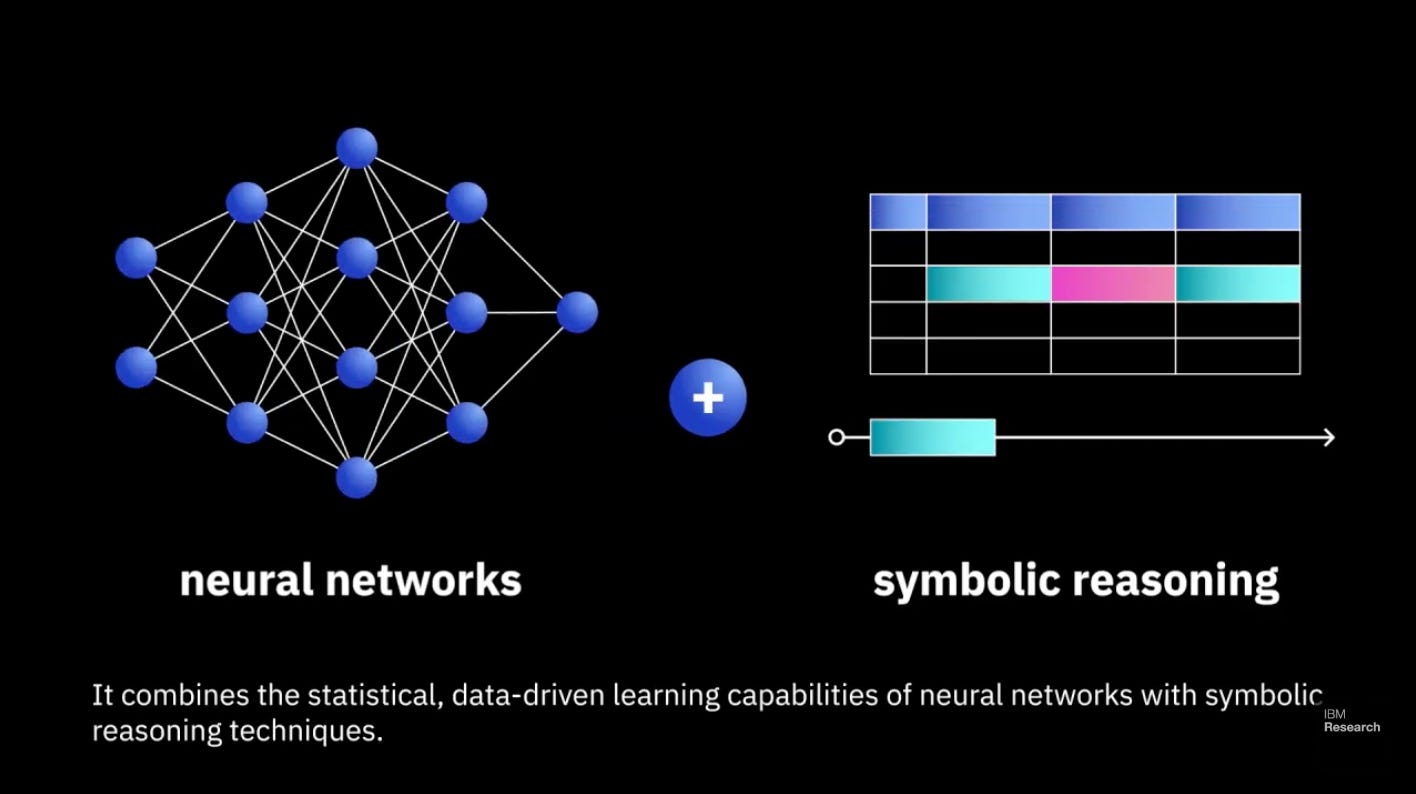

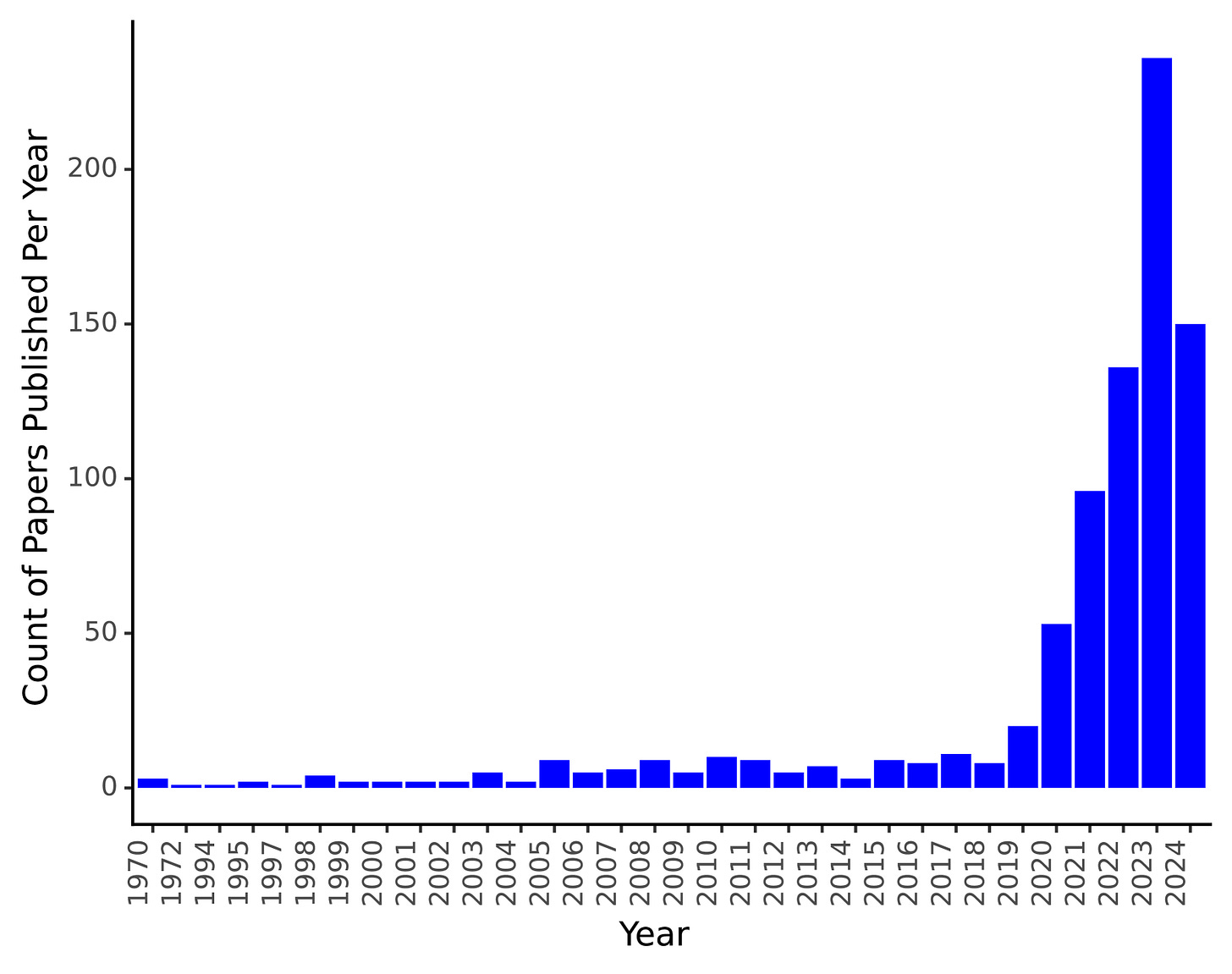

Enter neurosymbolic AI, a hybrid architecture that's moving from academic theory to production systems across industries like e-commerce, manufacturing, and robotics. By fusing neural networks' pattern recognition with symbolic AI's formal logic, these systems promise something that seemed contradictory: AI that's both creative and correct.

The Architecture of Accountability

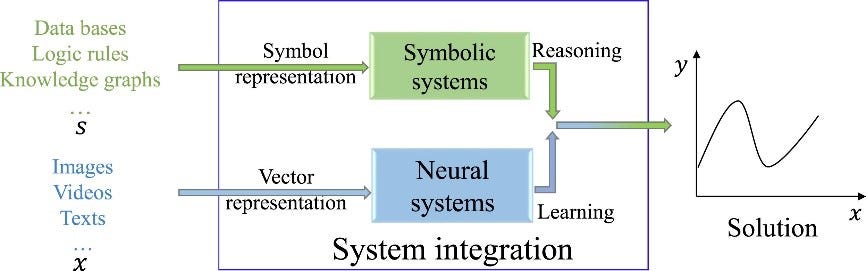

The transition from pure neural to neurosymbolic architectures reflects a deeper understanding of intelligence itself. Where traditional deep learning treats reasoning as an emergent property of massive parameter spaces, neurosymbolic AI explicitly separates learning from logical inference.

At its core, neurosymbolic AI operates through what researchers call the "neurosymbolic cycle": a continuous feedback loop between neural and symbolic components. Neural networks extract patterns from raw data: customer behaviors, sensor readings, visual inputs. These patterns then feed into symbolic reasoning modules that apply formal rules, constraints, and domain knowledge to ensure outputs remain grounded in verifiable logic.

Consider a manufacturing quality control system. The neural component learns to spot defects in product images, but its predictions must pass through symbolic rules encoding safety standards and regulations before anything is approved. The system can't hallucinate a safety certification that doesn't exist, because the symbolic layer acts as a logical gatekeeper.
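To make the gatekeeper idea concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `Inspection` record, the `MAX_DEFECT_SCORE` threshold, and the two rules are hypothetical stand-ins for a real vision model and a real safety standard.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Inspection:
    part_id: str
    defect_score: float       # hypothetical neural output in [0, 1]
    certified_supplier: bool  # fact looked up in a records system, not predicted


# Hypothetical limit standing in for an encoded safety standard.
MAX_DEFECT_SCORE = 0.2


def symbolic_gate(inspection: Inspection) -> Tuple[bool, List[str]]:
    """Return (approved, violations). Approval requires every rule to hold."""
    violations = []
    if inspection.defect_score > MAX_DEFECT_SCORE:
        violations.append("defect score exceeds limit")
    if not inspection.certified_supplier:
        violations.append("no supplier certification on record")
    return (not violations, violations)


# The neural score looks fine, but the certification fact is missing.
approved, reasons = symbolic_gate(Inspection("P-100", 0.05, False))
```

The point of the design is that certification is a recorded fact checked by a rule, so a confident neural score alone can never produce an approval.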

This dual architecture addresses what recent systematic reviews identify as the meta-cognition challenge: the ability for AI systems to monitor, evaluate, and correct their own reasoning processes. When a neural network generates a potential output, the symbolic layer acts as a logical auditor, checking consistency against encoded knowledge before allowing the output to proceed.

The Knowledge Integration Problem

The technical elegance of neurosymbolic AI masks significant implementation challenges. Research from KU Leuven and other institutions points out that it's hard to get the two sides of the system to work together: neural networks think in patterns and probabilities, while symbolic systems think in rules and logic. Bridging those two ways of "thinking" requires complex translation methods, and there isn't yet a standard way to do it.

Different frameworks handle this translation problem in wildly different ways—some embedding constraints directly into training, others keeping neural and symbolic modules separate. Without standards, every implementation becomes custom engineering work, severely limiting scalability.
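As a rough illustration of the first style, embedding a constraint directly into training, the Python sketch below adds a soft penalty for violating a rule of the form "A implies B" to an ordinary cross-entropy loss. This is one simple fuzzy-logic style relaxation chosen for the example, not the API of any particular framework; the function names and the `weight` parameter are assumptions.

```python
import math


def cross_entropy(p_true: float) -> float:
    """Negative log-likelihood the model assigns to the correct label."""
    return -math.log(p_true)


def implication_penalty(p_a: float, p_b: float) -> float:
    """Soft cost for violating the rule 'A implies B'.

    Zero when the model believes B at least as strongly as A,
    and grows linearly with the gap otherwise.
    """
    return max(0.0, p_a - p_b)


def constrained_loss(p_true: float, p_a: float, p_b: float, weight: float = 1.0) -> float:
    """Data-fit term plus a weighted rule-violation term."""
    return cross_entropy(p_true) + weight * implication_penalty(p_a, p_b)


# Hypothetical rule: "defective implies rejected".
violating = constrained_loss(p_true=0.9, p_a=0.8, p_b=0.3)   # believes defective, not rejected
consistent = constrained_loss(p_true=0.9, p_a=0.8, p_b=0.9)  # beliefs agree with the rule
```

In the second style, the model trains with no such term and a separate symbolic module checks its outputs afterwards. The trade-off is that a training penalty only discourages violations, while a separate checker can block them outright.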

The Economics of Explainability

The business case for neurosymbolic AI transcends technical performance metrics. In regulated industries, explainability isn't a nice-to-have feature—it's a compliance requirement with direct financial implications.

Financial institutions face a stark reality: they must explain every credit decision to regulators. Pure neural networks—statistical black boxes—fail this test entirely, forcing banks to either sideline AI or risk penalties.

Neurosymbolic systems flip the script. By encoding lending regulations directly into the symbolic layer, they generate decisions with built-in audit trails: every output traces back through explicit logical rules. According to technology monitoring by the European Data Protection Supervisor (EDPS), this makes neurosymbolic AI potentially the only viable architecture for certain regulated applications.
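A built-in audit trail can be as simple as recording the outcome of every rule alongside the decision. The Python sketch below assumes a hypothetical applicant record and three made-up rules; the thresholds are illustrative only and do not reflect any real lending regulation.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    approved: bool
    trail: list = field(default_factory=list)  # (rule name, "pass" or "fail") pairs


# Hypothetical rules: (human-readable name, predicate over the applicant record).
# The last rule consumes a neural risk score; the others check plain facts.
RULES = [
    ("applicant is at least 18", lambda a: a["age"] >= 18),
    ("debt-to-income ratio at most 0.40", lambda a: a["dti"] <= 0.40),
    ("model default risk at most 0.10", lambda a: a["risk_score"] <= 0.10),
]


def decide(applicant: dict) -> Decision:
    """Evaluate every rule, logging each outcome, and approve only if all pass."""
    trail = []
    approved = True
    for name, predicate in RULES:
        passed = predicate(applicant)
        trail.append((name, "pass" if passed else "fail"))
        approved = approved and passed
    return Decision(approved, trail)


decision = decide({"age": 34, "dti": 0.45, "risk_score": 0.04})
```

Here the neural risk score is favorable, yet the application is declined, and `decision.trail` shows exactly which rule caused it.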

The Trust Multiplier Effect

Beyond regulatory compliance, explainability drives adoption rates. When users understand how AI reaches conclusions, they're more likely to accept its recommendations.

This trust multiplier shows clearly in e-commerce. Where traditional engines suggest products through opaque similarity scores, neurosymbolic systems explain their logic: "This matches your preference for sustainable materials (symbolic rule) and aligns with similar customers' patterns (neural learning)."
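One way such an explanation could be assembled is sketched below in Python. The `explain` function, the product fields, and the `min_similarity` cutoff are all hypothetical; the similarity score stands in for whatever a trained recommender would output.

```python
def explain(product: dict, preferences: set, similarity: float,
            min_similarity: float = 0.5) -> str:
    """Build a readable justification from a symbolic match and a neural score."""
    reasons = []
    # Symbolic side: explicit overlap between product tags and stated preferences.
    matched = sorted(preferences & set(product["tags"]))
    if matched:
        reasons.append(
            "matches your preference for " + ", ".join(matched) + " (symbolic rule)"
        )
    # Neural side: a learned similarity score, reported only above a cutoff.
    if similarity >= min_similarity:
        reasons.append(
            f"aligns with similar customers' patterns (neural score {similarity:.2f})"
        )
    if not reasons:
        return f"{product['name']}: no supporting reason found"
    return f"{product['name']}: " + "; ".join(reasons)


message = explain(
    {"name": "Linen shirt", "tags": ["sustainable materials", "summer"]},
    preferences={"sustainable materials"},
    similarity=0.82,
)
```

Keeping the two sources of evidence separate in the message lets a user see which part came from a rule they can verify and which part came from learned behavior.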

The deeper advantage is structural resistance to hallucination. Rather than throwing scale at the problem, as purely neural approaches tend to do, neurosymbolic architectures enforce logical consistency through symbolic constraints. The symbolic layer becomes a truthfulness firewall: a medical system can't recommend treatments that violate protocols, and a financial advisor can't suggest investments that breach regulations. No statistical likelihood can override these formal rules.

Industry Transformation Vectors

The adoption patterns reveal a clear hierarchy: industries where trust is existential lead, while those optimizing for engagement follow cautiously.

Manufacturing hits the sweet spot—predictive maintenance that spots invisible anomalies while never violating safety protocols.

Healthcare shows the stakes most clearly. A diagnostic AI suggesting a treatment that interacts fatally with a patient's medications isn't just wrong—it's lethal. Neurosymbolic systems encode drug interaction databases and treatment protocols as inviolable rules. The neural layer spots patterns; the symbolic layer prevents harm.
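The "inviolable rule" idea can be sketched in a few lines of Python. The drug names and the interaction table below are placeholders, not medical data; a real system would load a curated interaction database and apply far richer protocol logic.

```python
# Hypothetical interaction table; each entry is an unordered pair that must
# never be prescribed together.
FORBIDDEN_PAIRS = {frozenset({"drug_a", "drug_b"})}


def safe_candidates(ranked: list, current_meds: set) -> list:
    """Filter neural suggestions (best first), dropping any that conflict
    with a medication the patient already takes."""
    safe = []
    for drug in ranked:
        conflicts = any(
            frozenset({drug, med}) in FORBIDDEN_PAIRS for med in current_meds
        )
        if not conflicts:
            safe.append(drug)
    return safe


# The model ranks drug_a highest, but the patient already takes drug_b.
suggestions = safe_candidates(["drug_a", "drug_c"], current_meds={"drug_b"})
```

However highly the neural layer ranks a candidate, it cannot reach the output if the table forbids the combination.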

Robotics might be the most natural fit, with the architecture mirroring the problem itself: perception (neural) plus planning (symbolic). Warehouse robots navigate dynamically while respecting strict safety zones.
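For the warehouse case, a symbolic safety check might look like the Python sketch below. It assumes axis-aligned rectangular keep-out zones and a path given as a list of waypoints, and it tests only the waypoints themselves, not the segments between them; a production planner would need a proper geometric check. The `Zone` type and all coordinates are hypothetical.

```python
from typing import NamedTuple


class Zone(NamedTuple):
    """Axis-aligned rectangular keep-out area."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def path_is_allowed(waypoints, keep_out) -> bool:
    """Reject any proposed path with a waypoint inside a keep-out zone."""
    return not any(
        zone.contains(x, y) for x, y in waypoints for zone in keep_out
    )


KEEP_OUT = [Zone(2.0, 2.0, 4.0, 4.0)]

# A path proposed by the (hypothetical) neural planner cuts through the zone.
proposed = [(0.0, 0.0), (1.0, 1.0), (3.0, 3.0)]
# An alternative that routes around it.
detour = [(0.0, 0.0), (1.0, 1.0), (1.0, 5.0), (5.0, 5.0)]

proposed_ok = path_is_allowed(proposed, KEEP_OUT)
detour_ok = path_is_allowed(detour, KEEP_OUT)
```

The perception and planning components can be as adaptive as needed, since any path they produce still has to pass this check before the robot moves.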

E-commerce faces "constrained creativity." Recommendation engines must balance what customers want with what businesses can actually deliver—a deceptively simple requirement that pure neural systems routinely violate. The neural layer learns preferences; the symbolic layer enforces inventory reality and business rules.

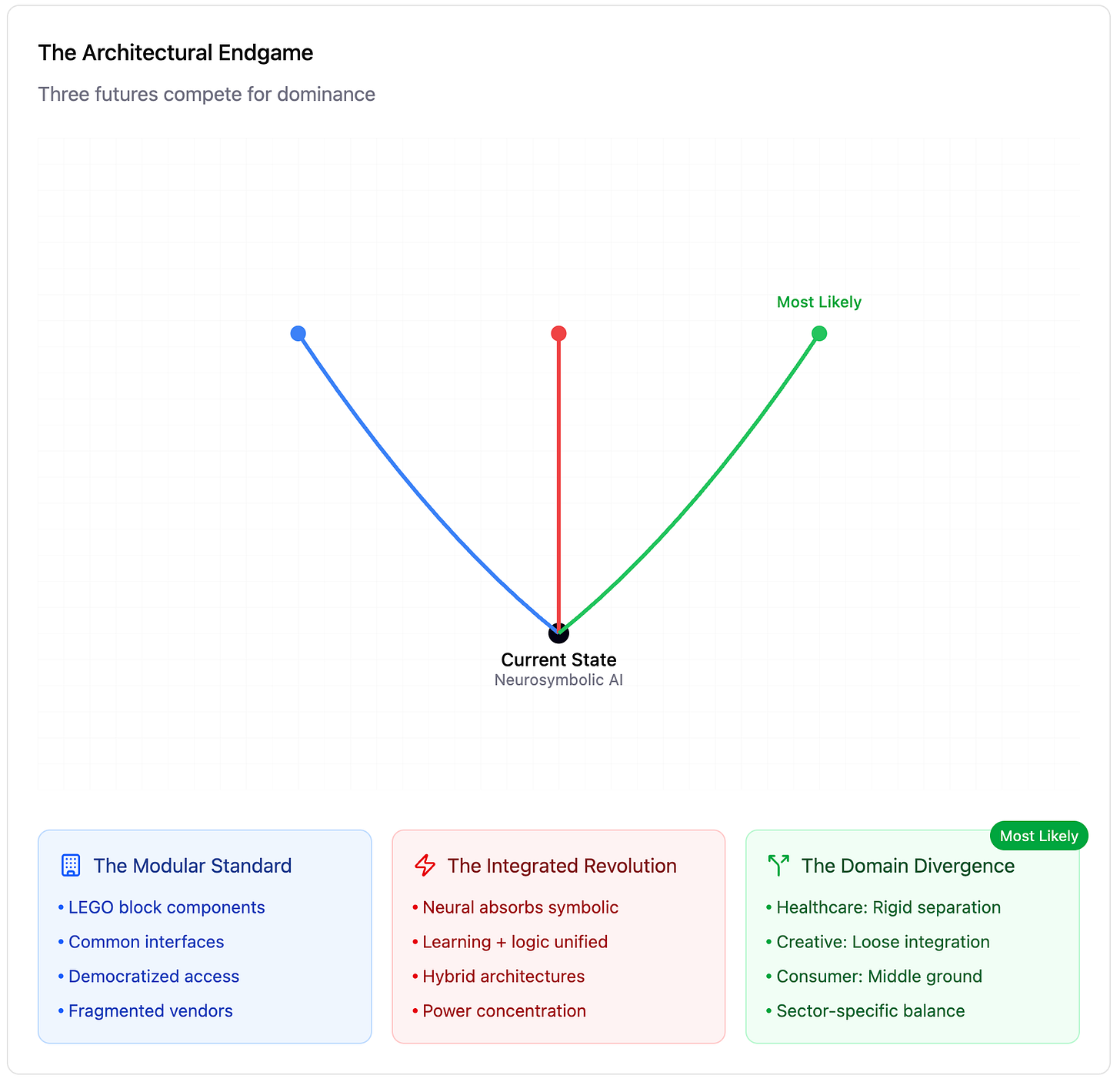

The Architectural Endgame

While some imagine modular standards or fully integrated architectures, the reality points toward domain divergence: Healthcare will demand rigid separation for auditability—every decision traceable through discrete logical steps. Creative industries will blur the boundaries, letting neural intuition guide with minimal symbolic constraints. Finance will find pragmatic middle ground, using tight symbolic controls for regulated decisions while letting neural networks roam free for market analysis.

The Trust Imperative

Here's where neurosymbolic AI's true disruption lies: it reframes the entire conversation about AI reliability. The question shifts from "How often is the AI right?" to "Can the AI guarantee it won't violate critical constraints?"

For regulated industries, this is survival. A financial AI that can't explain loan decisions faces regulatory extinction. A medical diagnostic system that might hallucinate treatments is legally radioactive. Neurosymbolic architectures offer a credible path forward: intelligence with built-in accountability.

In a world increasingly dependent on automated decisions, the question isn't whether AI can be brilliant—it's whether we can trust it when it matters. Neurosymbolic AI makes machines more accountable.

Keep a lookout for the next edition of AI Uncovered!
