Google’s AI Check Move: I/O 2025 and the Future of Assistants

At Google I/O 2025, the company put AI front and center. Nearly every major announcement revolved around Gemini, Google’s flagship AI model, and its deeper integration into products from Search to Android. The tone was ambitious and optimistic: Sundar Pichai touted a “new phase” in which AI becomes a collaborative “coworker and a superpower” in our daily lives.

Inside Gemini 2.5 – The Intelligence Behind the Shift

The Gemini 2.5 model family received notable upgrades. These include “Deep Think,” an enhanced reasoning mode for Gemini 2.5 Pro aimed at complex problem-solving, and improved multimodal capabilities for seeing, speaking, and creating content. Google even framed these advances as steps toward “world models”: AI systems with a richer, more human-like understanding of the world.

Nowhere is Google’s AI strategy more evident than in Search, its cornerstone product. The traditional “ten blue links” are evolving into a dynamic, conversational experience. AI Overviews, the AI-generated summaries at the top of search results that began as an experiment, are rolling out globally, and Google reports that they already reach 1.5 billion users a month.

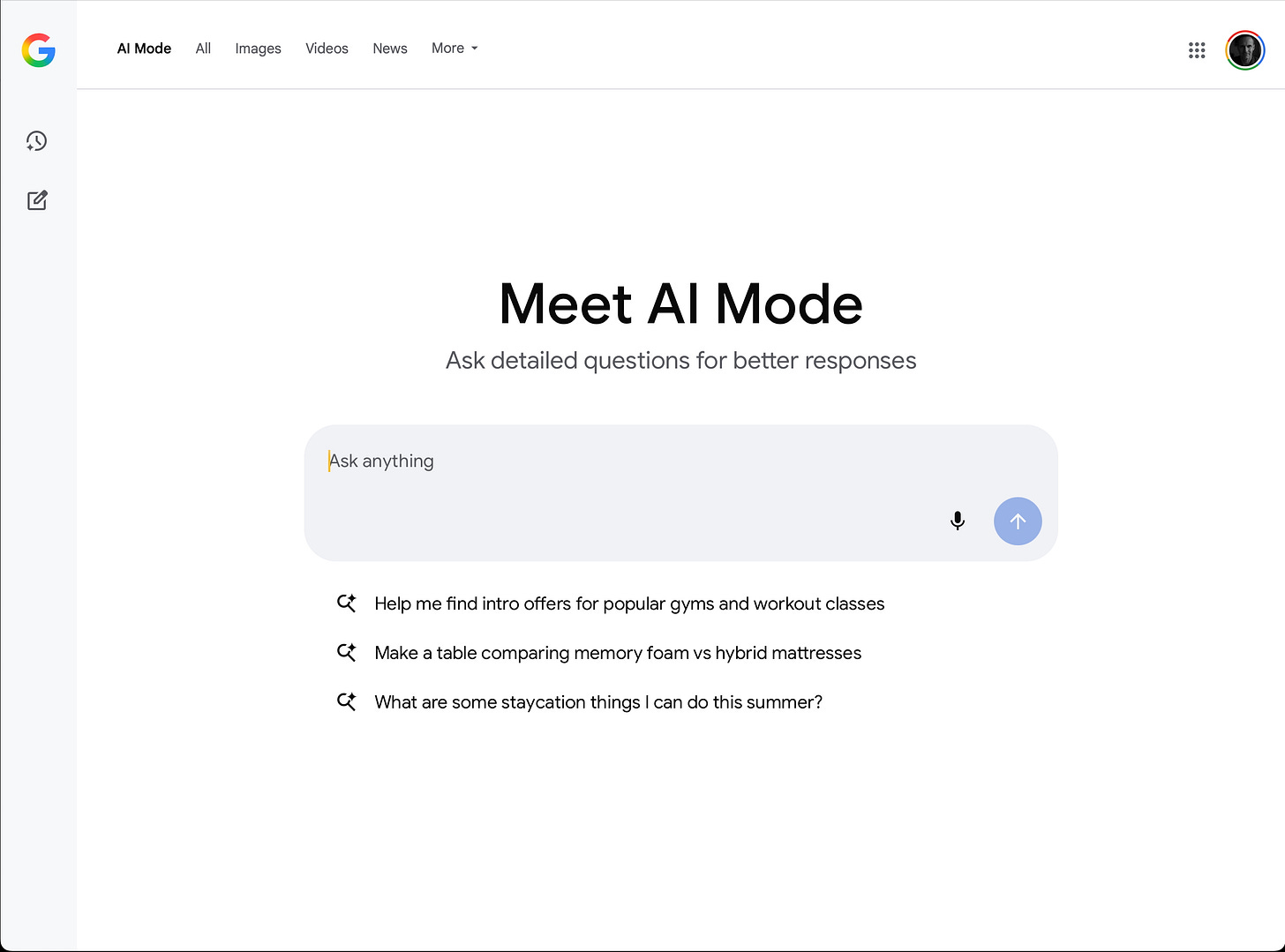

In the U.S., Google introduced “AI Mode” for Search, essentially a chatbot built directly into the search interface. AI Mode can break complex queries into sub-questions and synthesize answers with citations. Google also showed it handling real-time visual queries through your camera and performing agentic tasks such as booking reservations.

Google also showcased how Gemini is woven into its developer and consumer tools. Google AI Studio, for example, now makes it easier for developers to build with the Gemini API. Android is gaining Gemini-powered APIs, and Gemini is set to replace Google Assistant as the default assistant.

During the keynote, Google previewed agents like Jules, an asynchronous coding assistant, and Stitch, a UI design generator. These previews underscored how AI will assist professionals in coding, design, and beyond. Google also highlighted new generative media tools: Imagen 4 for sharper image creation and Veo 3 for AI-generated video.

Monetization and Privacy Concerns

Google’s AI push is not just technological but also commercial. The company introduced new paid tiers for AI access, acknowledging that its most advanced AI features will be a premium product. It announced a Google AI Pro plan ($19.99 per month) and a high-end AI Ultra plan ($249.99 per month). These subscriptions give users access to more powerful Gemini models, faster response times, and extra features. AI Ultra subscribers, for example, get the highest rate limits and early access to Gemini’s newest capabilities. They also receive perks such as expanded cloud storage and ad-free YouTube.

The steep $249.99 price tag for AI Ultra raised some eyebrows. Google pitches it to enterprises and AI power users, “for people that want to be on the absolute cutting edge of AI,” according to the Gemini team’s VP. Reactions were mixed. Some in industry forums noted that such a subscription could make sense for companies if it boosts productivity, while others questioned whether the features justify the cost. The introduction of these tiers underlines Google’s strategy to monetize AI beyond ads, but it also positions advanced AI as something of a luxury service for now.

An assistant that works across your entire digital life naturally needs context, but that context comes at a cost. Gemini collects and stores your interactions, along with device information, language settings, and general location. Unless you change the setting, Google keeps this activity for 18 months; users can shorten that window to 3 months or extend it to 36. Some conversations may also be reviewed by human annotators to improve the system’s responses. In short, when you talk to Gemini, your words may be stored, analyzed, and used to refine the product.

Google is fairly blunt in its disclosure: “Don’t enter confidential information,” the support docs warn. If you wouldn’t email it to a stranger, you probably shouldn’t tell Gemini either.

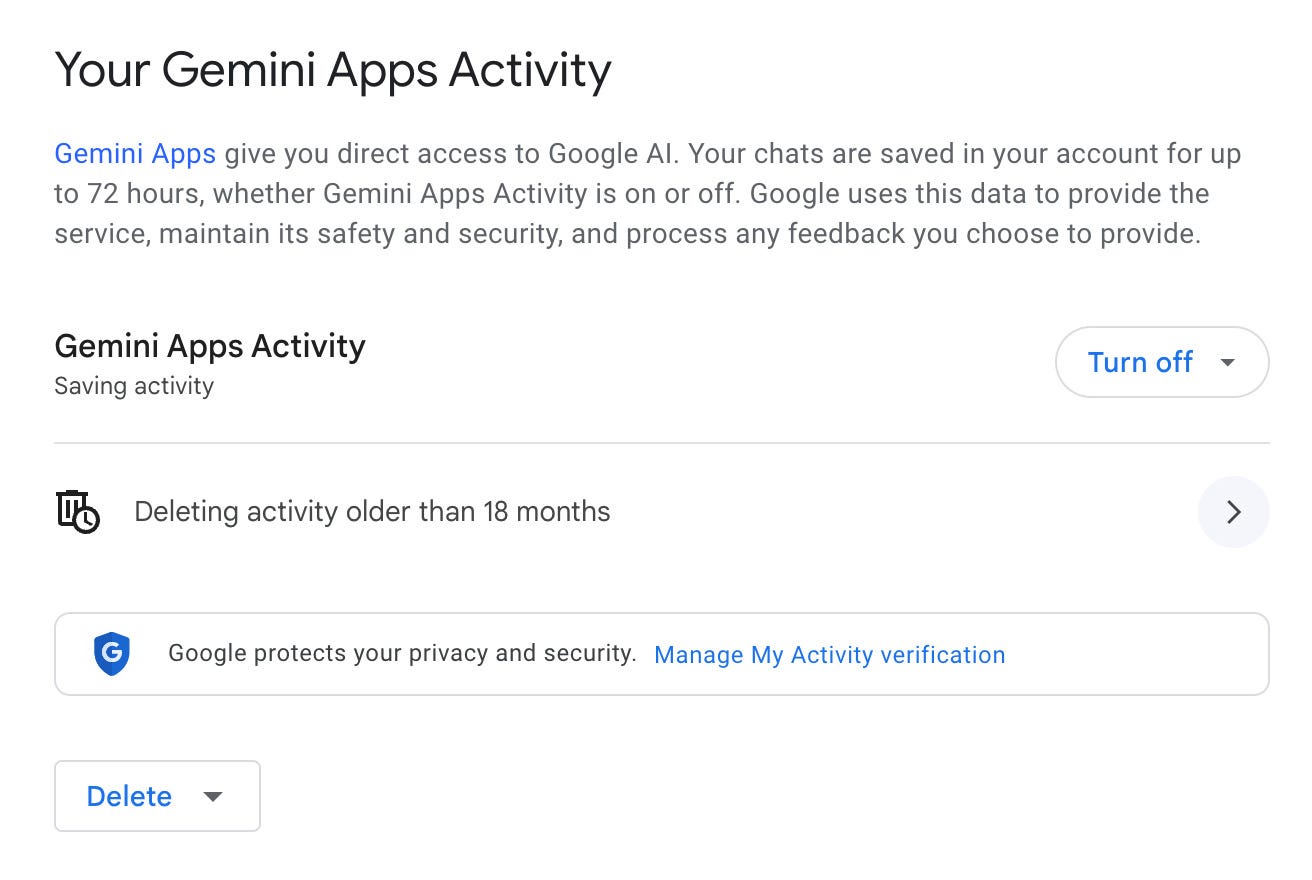

Users do have some control. The My Activity dashboard includes a toggle called “Gemini Apps Activity,” which is on by default. Turning it off stops long-term logging of your Gemini chats, but it doesn’t eliminate all tracking. Even with the setting off, Google keeps conversations for up to 72 hours, citing security and abuse monitoring.

You can also manually delete individual chats or clear all activity—similar to how YouTube or Search history can be wiped. But again, the assistant’s memory is never entirely zeroed out.

Gemini goes further when acting as your device assistant. If permissions are granted, it can tap into your calendar, contacts, precise location, app usage, and smart home devices. All of this fuels its ability to respond with context-aware suggestions—but also deepens its visibility into your personal life.

The message from Google is clear: the system works best when it knows more about you. Turn off tracking or deny permissions, and Gemini becomes less helpful. The trade-off is convenience versus control—one that’s familiar by now, but still worth scrutinizing.

Compared with peers like OpenAI, Google’s privacy positioning is competitive. The toggles exist, and the permissions are (mostly) transparent. However, the defaults still favor data collection, because activity logging stays on unless users opt out. The most powerful features also require a level of data sharing that not every user will be comfortable with.

For now, using Gemini means making a judgment call: how much are you willing to let it know in exchange for a smoother digital life? Users are familiar with privacy trade-offs from other digital platforms. AI assistants, however, deserve closer scrutiny because they can draw more intimate inferences and predictions about users’ personal lives.

Competition Heats Up: OpenAI, Anthropic, and Apple

Despite Google’s scale, the AI race is far from decided.

OpenAI made headlines recently by acquiring io, the AI hardware startup co-founded by Jony Ive, in a deal valued at about $6.5 billion. Ive will help lead OpenAI’s push into hardware, potentially designing a new AI-native device. CEO Sam Altman sees such a device as a reimagining of the personal computer. While Google integrates AI into existing platforms, OpenAI may build a new hardware category from scratch. The implication: the next “smartphone moment” might not belong to Google.

Anthropic is pressing forward on raw model capability. It positions Claude Opus 4, the flagship of its new Claude 4 family, as the most advanced coding model available, capable of sustained reasoning on long-running tasks and autonomous agent workflows. The company’s focus on AI reliability and safety through its “Constitutional AI” approach appeals to businesses that prioritize trustworthy outputs. Gemini may be everywhere, but Claude is making a case for being smarter and more predictable.

Apple, while behind in the AI race by many counts, is quietly building its own LLM strategy. It has yet to show AI that can match the flexibility or power of Gemini or ChatGPT, so all eyes are on WWDC for its next move.

What’s at Stake for Google

The AI race was never just about building the smartest model. It’s about distribution, integration, and trust—and Google is now trying to assert control across all three fronts.

Google, for now, has both a mature ecosystem and a fast-growing AI footprint. The Gemini app reportedly has more than 400 million monthly active users. Gemini is also integrated into products people already rely on, so many users get ambient assistance in their existing workflow without installing a new app or changing their habits.

If Google continues on this trajectory, Gemini could become a default layer across platforms—possibly even on iPhones, where regulatory pressure in the EU might force Apple to allow alternative assistants.

Still, that future isn’t guaranteed. At I/O 2025, Google shifted from playing catch-up to setting a vision. Whether Gemini becomes the assistant—or just one of many—will depend on execution, user trust, and how competitors respond in the months ahead.

Keep a lookout for the next edition of AI Uncovered!

Follow our social channels for more AI-related content: LinkedIn; Twitter (X); Bluesky; Threads; and Instagram.