Anthropic Releases Much-Awaited Claude 3.7 Sonnet

Anthropic's Claude 3.7 Sonnet model introduces hybrid reasoning with dual modes for quick and deep thinking.

Anthropic has recently released the highly anticipated Claude 3.7 Sonnet model, and it is a significant leap in Anthropic’s AI capabilities. As the latest release in the Claude series, it blends quick responsiveness with deep reasoning in a single system. Below, I explore its general overview and key capabilities, performance improvements, comparisons to the previous Claude 3.5, and potential real-world applications and industry impact.

General Overview & Key Capabilities

Claude 3.7 Sonnet is described as Anthropic’s most intelligent AI model to date, and notably the first hybrid reasoning model on the market. In practical terms, this means the model can function in two distinct modes within one system, rather than having separate AI models for different tasks.

Hybrid Reasoning (Dual Modes)

Claude 3.7 can deliver near-instant answers in a standard mode or perform extended, step-by-step reasoning in an “extended thinking” mode, all using the same unified model. This approach is inspired by how a human brain works – we use the same brain for quick answers and for solving complex problems, and Claude 3.7 mirrors this by integrating both fast and deep thinking capabilities in one AI. Users can toggle between the two modes as needed, choosing fast responses for simple prompts or more deliberative reasoning for complex queries.

When in extended thinking mode, Claude 3.7 actually works through problems step-by-step (planning, considering alternatives, doing intermediate calculations) and makes this reasoning visible to the user. This transparency allows users to follow the model’s thought process, increasing trust and making debugging of complex prompts easier.
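For developers, that visible reasoning shows up as structured data. In Anthropic's Messages API, a response is a list of content blocks, and with extended thinking enabled the reasoning arrives in blocks of type `thinking` alongside the final `text` blocks. Here is a minimal sketch that separates the two. The helper name `split_reasoning` and the sample response are invented for illustration, not taken from Anthropic's documentation.

```python
from typing import Dict, List, Tuple


def split_reasoning(content: List[Dict[str, str]]) -> Tuple[str, str]:
    """Separate visible reasoning from the final answer.

    `content` is assumed to be the list of content blocks from a
    Messages API response, already decoded from JSON into dicts.
    """
    reasoning_parts = []
    answer_parts = []
    for block in content:
        if block.get("type") == "thinking":
            reasoning_parts.append(block.get("thinking", ""))
        elif block.get("type") == "text":
            answer_parts.append(block.get("text", ""))
        # Any other block type is skipped in this sketch.
    return "\n".join(reasoning_parts), "\n".join(answer_parts)


# Invented sample, shaped like a response with extended thinking enabled.
sample_content = [
    {"type": "thinking", "thinking": "27 * 14 = 27 * 10 + 27 * 4 = 270 + 108."},
    {"type": "text", "text": "27 * 14 = 378"},
]

reasoning, answer = split_reasoning(sample_content)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

An application could show the reasoning in a collapsible panel and the answer in the main chat window, which is roughly how the thought process is surfaced to end users.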

Developers and advanced users have control over how long the model “thinks.” Through the API, one can specify a reasoning budget (in tokens) that Claude can use for its chain-of-thought before finalizing an answer. This means you can trade off speed vs. thoroughness: shorter reasoning for quick results or a larger budget for high-quality, detailed answers.
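To make the speed-versus-thoroughness trade-off concrete, here is a rough sketch of how a request with a reasoning budget might be assembled for the Messages API. The `thinking` parameter with `budget_tokens` is the API's mechanism for this; the helper `build_request`, its defaults, and the prompts are my own illustrative choices, and the model identifier is the one used at launch, so check the current model list before relying on it.

```python
from typing import Any, Dict

# Model identifier at launch; treat as an assumption and verify before use.
MODEL = "claude-3-7-sonnet-20250219"
MIN_BUDGET = 1024  # smallest thinking budget the API accepts


def build_request(prompt: str, budget_tokens: int = 0,
                  answer_tokens: int = 2048) -> Dict[str, Any]:
    """Build keyword arguments for a Messages API call.

    budget_tokens == 0 selects standard mode; any positive value turns on
    extended thinking with that many tokens reserved for reasoning.
    """
    request: Dict[str, Any] = {
        "model": MODEL,
        "max_tokens": answer_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if budget_tokens > 0:
        budget = max(budget_tokens, MIN_BUDGET)
        request["thinking"] = {"type": "enabled", "budget_tokens": budget}
        # max_tokens must exceed the thinking budget, since it covers
        # both the reasoning and the final answer.
        request["max_tokens"] = budget + answer_tokens
    return request


quick = build_request("What is the capital of France?")
deep = build_request("Prove that sqrt(2) is irrational.", budget_tokens=8000)
print(quick["max_tokens"], "thinking" in quick)
print(deep["max_tokens"], deep["thinking"])
```

With the official `anthropic` Python package installed and an API key configured, either dictionary can be sent with `anthropic.Anthropic().messages.create(**deep)`. The sketch stops short of the network call so that it runs anywhere.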

Improved Coding & Tool Use

Claude 3.7 Sonnet also shows especially strong capabilities in coding and software development tasks. It can handle complex codebases, plan code changes, and even integrate with development tools. In fact, along with the model, Anthropic introduced Claude Code, a command-line tool that leverages Claude 3.7 to assist with substantial engineering tasks directly from a developer’s terminal. This reflects the model’s advanced understanding of programming, enabling it to write code, debug, and use external tools as an AI pair programmer.

Despite its advanced nature, Claude 3.7 Sonnet is broadly accessible. It’s available on all of Anthropic’s plans (Free, Pro, Team, Enterprise), through the Anthropic API, and through major cloud platforms, including Amazon Bedrock and Google Cloud’s Vertex AI. (Extended thinking mode is available on all paid tiers; the free tier uses the standard mode.) Its integration into services like these means developers and businesses can start using Claude 3.7 easily via familiar platforms. For example, GitHub Copilot has incorporated Claude 3.7 Sonnet in its lineup for coding assistance.

Performance Improvements in Claude 3.7

With the release of Claude 3.7 Sonnet, users can expect substantial performance gains over previous versions in terms of speed, accuracy, reasoning depth, and comprehension.

Here are some of the notable improvements:

Speed and Responsiveness: In standard mode, Claude 3.7 answers straightforward queries almost instantly, which keeps interactive conversations snappy and efficient. Despite the added capabilities, the model remains as responsive as its predecessor when you need quick answers.

Deep Reasoning & Accuracy: When tackling complex problems, Claude 3.7’s extended thinking mode significantly boosts its accuracy and reasoning quality. By taking extra time to self-reflect and reason step-by-step, the model achieves better results on tasks like math problems, physics questions, and multi-step logical reasoning. In other words, it’s less likely to make a mistake on complicated queries because it can analyze them more thoroughly before answering. This hybrid approach means you get the best of both worlds: speed when you want it, and careful reasoning when you need it.

Stronger Problem-Solving and Planning: Claude 3.7 demonstrates a greater ability to break down complex tasks into steps and follow through on them than Claude 3.5 did. The model can understand a high-level request and plan out the necessary steps more effectively, which is crucial for tasks like writing long-form content, executing multi-step commands, or solving elaborate problems.

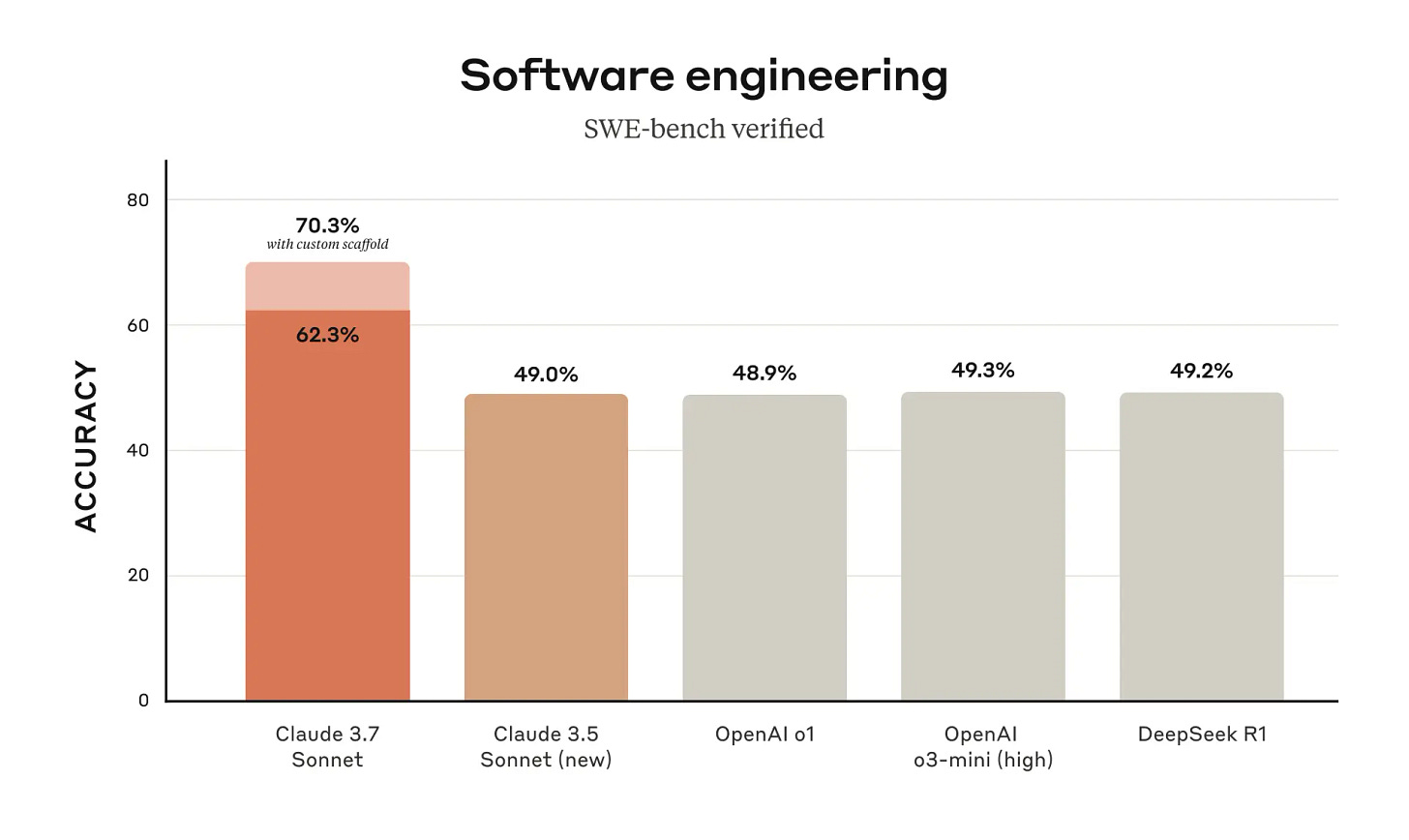

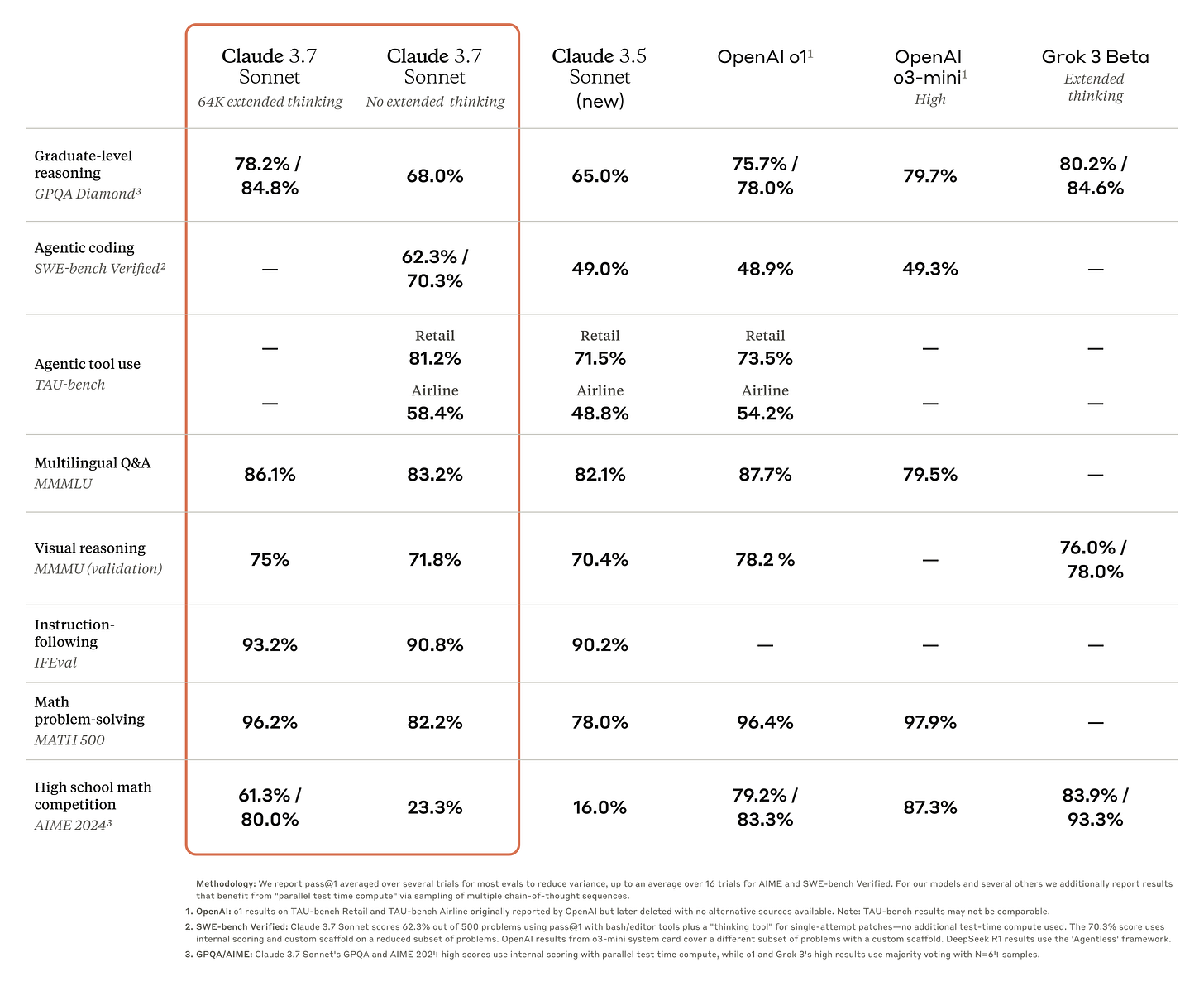

Enhanced Coding Capabilities: One area of dramatic improvement is coding. Claude 3.7 is currently Anthropic’s best model for programming tasks, excelling at reading and writing code, understanding code context, and creatively solving coding challenges. In benchmark tests, it scored 62.3% on SWE-bench Verified in standard mode, rising to 70.3% with a custom scaffold, which was a state-of-the-art result at release. This is a clear jump from Claude 3.5’s performance, and it translates to more reliable code generation and debugging.

Better Context Understanding: Claude 3.7 has shown improvements in keeping track of context and maintaining coherence over long interactions. Its comprehension of user intent and instructions is more nuanced, reducing instances where the AI might go off-track or give irrelevant answers. This is partly due to training focused on real-world tasks and feedback. One practical upshot is fewer misunderstandings: the model is less likely to provide an answer that misses the point of the question.

Larger Output and Memory: A notable upgrade is the model’s capacity to generate much longer responses. Claude 3.7 Sonnet can produce outputs over 15 times longer than Claude 3.5 could, supporting answers up to 128K tokens in length (for reference, that is on the order of 100,000 words in a single response). This expanded output length means the model can write detailed explanations, lengthy reports, or extensive code listings without being cut off. It’s especially useful when you ask for comprehensive outputs, such as a full-length report or many worked examples in one go. The model’s ability to produce such lengthy content without losing coherence represents a big step up in consistency over long outputs.
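The arithmetic behind those figures is easy to check. The sketch below assumes Claude 3.5 Sonnet's output cap was 8,192 tokens and uses a common rule of thumb of about 0.75 English words per token. Both numbers are assumptions on my part rather than figures from the announcement, and the real words-per-token ratio varies with the text.

```python
CLAUDE_35_MAX_OUTPUT = 8_192     # tokens; assumed output cap for Claude 3.5 Sonnet
CLAUDE_37_MAX_OUTPUT = 128_000   # tokens; "128K" taken as 128,000
WORDS_PER_TOKEN = 0.75           # rule-of-thumb assumption for English text

ratio = CLAUDE_37_MAX_OUTPUT / CLAUDE_35_MAX_OUTPUT
approx_words = CLAUDE_37_MAX_OUTPUT * WORDS_PER_TOKEN

print(f"Output length ratio: {ratio:.1f}x")
print(f"Approximate words at the cap: {approx_words:,.0f}")
```

Under these assumptions the ratio comes out a little above 15, and the word count lands just under 100,000, consistent with the figures quoted above.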

Reliability and Safety: Alongside raw performance, Claude 3.7 has been tuned for better reliability. It makes more nuanced judgments about user requests, resulting in 45% fewer unnecessary refusals compared to Claude 3.5. In practical terms, the AI is better at saying “Yes” to safe requests that it previously might have incorrectly refused, all while still declining truly harmful or inappropriate prompts. This improvement reduces frustration for users who might have seen the model refuse benign queries in the past.

Overall, these performance improvements mean Claude 3.7 feels not only smarter but also more usable. It’s faster when you need speed, smarter when you need complex reasoning, and more capable across tasks ranging from writing code to understanding long documents.

Potential Applications & Industry Impact

The advancements in Claude 3.7 Sonnet open up new practical applications and benefits for both individual users and industries.

Here are a few ways businesses and users can leverage Claude 3.7 in real-world scenarios:

Software Development and Engineering

Perhaps the most immediate impact is in coding and software workflows. Claude 3.7’s superior coding ability makes it a powerful AI pair programmer. Developers can use it to generate code snippets, refactor existing code, or even draft entire functions/modules with fewer errors. Its integration into GitHub Copilot (in public preview) means developers on Copilot can choose Claude 3.7 as an AI assistant to get more accurate and context-aware suggestions while coding.

Companies like Replit have used Claude to build web apps and dashboards automatically from specifications, and internal tests showed it can handle full-stack updates and planning code edits better than other models. This translates to faster development cycles and lower engineering costs, as some of the grunt work can be offloaded to the AI.

Furthermore, with the introduction of Claude Code, developers can automate parts of the development pipeline (like running tests or committing code) through natural language commands, potentially speeding up the entire software development lifecycle. In fact, Amazon has integrated Claude 3.7 into its Amazon Bedrock service and into Amazon Q, its AI assistant for developers, to help with complex coding tasks, highlighting its value in enterprise software workflows.

AI Agents and Workflow Automation

Claude 3.7’s hybrid reasoning and tool-using ability make it ideal for powering AI agents – systems that perform multi-step tasks autonomously. Businesses can deploy Claude 3.7 as the brain of an AI assistant that handles complex processes, from customer service bots that troubleshoot issues step-by-step, to virtual assistants that can schedule, calculate, or perform web research as part of a larger task.

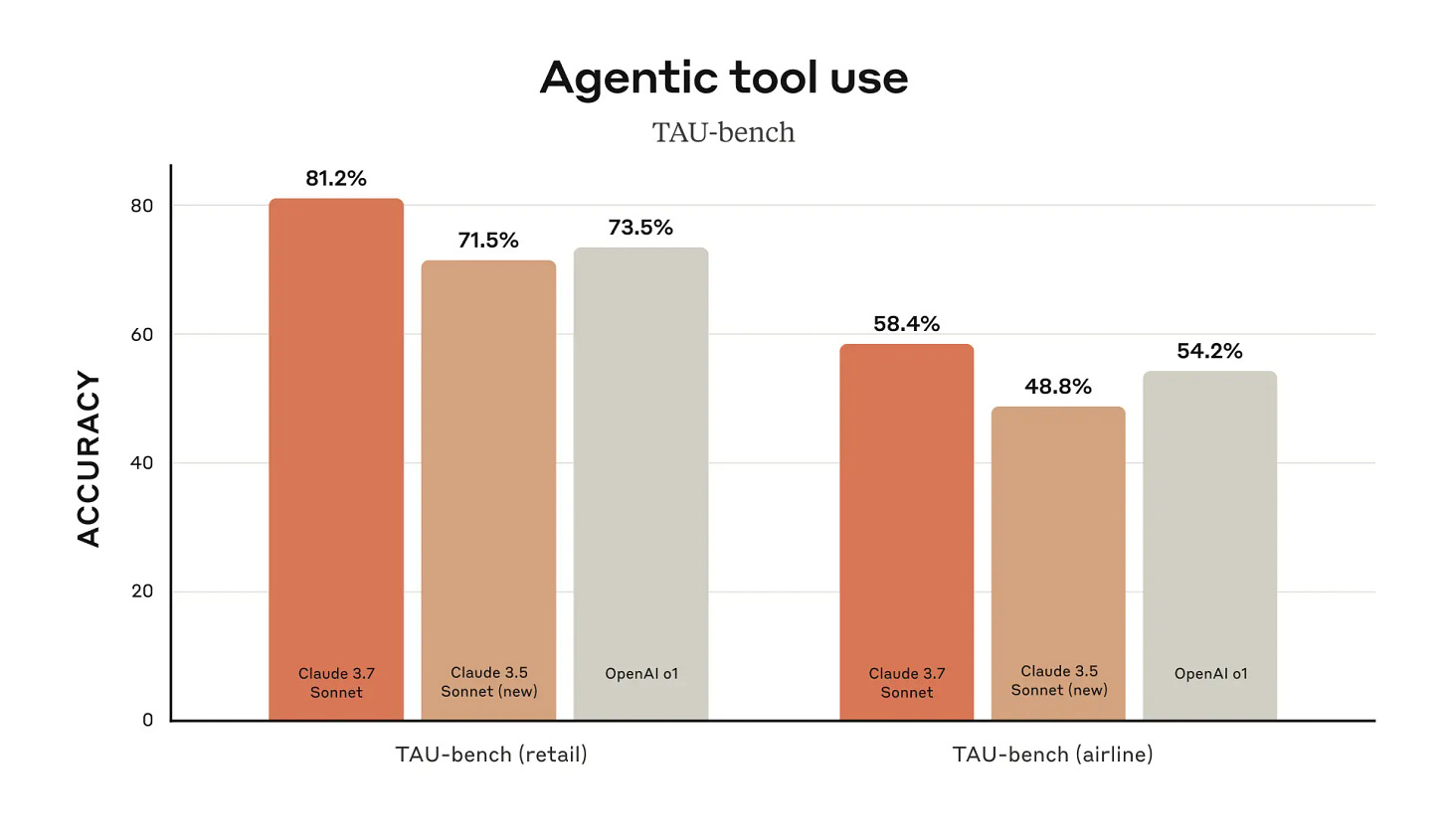

Its strong performance on benchmarks like TAU-bench (which simulates complex real-world tasks involving tool use and user interaction) indicates it can manage sequences of actions reliably. For example, an IT helpdesk might use a Claude-based agent to walk through problem diagnosis and solution implementation, consulting documentation and executing commands in extended mode. Because Claude 3.7 can plan and reason out the procedure, it’s more likely to succeed in such tasks. This could reduce the need for human intervention in routine but complicated processes, improving efficiency.
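To show what "managing a sequence of actions" means in code, here is a minimal sketch of an agent loop for the helpdesk example. The message shapes (`tool_use` and `tool_result` blocks, a `stop_reason` field) follow the general pattern of Anthropic's tool-use API, but everything else is hypothetical: `lookup_docs` is a made-up tool, and `stub_model` is a stand-in that imitates a model so the loop runs offline.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def lookup_docs(topic: str) -> str:
    """Hypothetical helpdesk tool: return a canned documentation snippet."""
    docs = {"vpn": "Restart the VPN client, then re-enter your credentials."}
    return docs.get(topic, "No documentation found.")


TOOLS: Dict[str, Callable[..., str]] = {"lookup_docs": lookup_docs}


def stub_model(messages: List[Message]) -> Message:
    """Stand-in for a real model call so the loop runs offline.

    It requests one tool call, then answers using the tool's result.
    """
    last = messages[-1]
    if last["role"] == "user" and isinstance(last["content"], str):
        return {"stop_reason": "tool_use", "content": [
            {"type": "tool_use", "id": "call_1", "name": "lookup_docs",
             "input": {"topic": "vpn"}}]}
    result = last["content"][0]["content"]
    return {"stop_reason": "end_turn", "content": [
        {"type": "text", "text": f"Suggested fix: {result}"}]}


def run_agent(question: str, model: Callable[[List[Message]], Message],
              max_steps: int = 5) -> str:
    """Alternate between model turns and tool executions until done."""
    messages: List[Message] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append({"role": "assistant", "content": reply["content"]})
        if reply["stop_reason"] != "tool_use":
            return "".join(b["text"] for b in reply["content"]
                           if b["type"] == "text")
        # Run every requested tool and send the results back to the model.
        results = [
            {"type": "tool_result", "tool_use_id": b["id"],
             "content": TOOLS[b["name"]](**b["input"])}
            for b in reply["content"] if b["type"] == "tool_use"
        ]
        messages.append({"role": "user", "content": results})
    return "Stopped: step limit reached."


final_answer = run_agent("My VPN keeps disconnecting.", stub_model)
print(final_answer)
```

The `max_steps` cap is a deliberate design choice: an agent that can call tools repeatedly needs a hard stop so that a confused model cannot loop forever.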

Content Creation and Analysis

Writers, analysts, and content creators can gain from Claude 3.7’s enhanced language abilities. The model can generate well-structured, coherent content in various styles, which is useful for drafting articles, marketing copy, or even technical documentation. Its extended output capacity (up to 128K tokens) means it can produce extremely long texts in a single response, such as a detailed research paper-style write-up, while its large context window lets it take in lengthy inputs, such as a long report or even a book, for summarization.

For businesses, this capability can be harnessed to automate report generation (e.g. compiling a detailed weekly report from bullet points), to summarize extensive documents (legal contracts, research literature, etc.), or to create personalized content at scale. Because Claude 3.7 has also improved at following complex instructions (see the GitHub Changelog post "Claude 3.7 Sonnet is now available in GitHub Copilot in public preview"), a user can give it a detailed brief or outline, and it will adhere more closely to those requirements, resulting in content that needs less editing.

Customer Service and Conversational AI

Improved comprehension and reasoning allow Claude 3.7 to serve in advanced chatbot roles. In customer service, a Claude 3.7-powered bot could handle more intricate queries than before – for instance, helping a customer troubleshoot an issue with a product by asking clarifying questions and reasoning through a solution.

The fast response mode ensures customers get immediate answers to simple questions, while the extended mode can be invoked for unusual or complicated inquiries that need a careful approach. Its better understanding of context over long conversations means it can carry information from one part of a chat to later parts more reliably, making the interaction feel more natural and human-like. Businesses leveraging Claude 3.7 in call centers or online support chatbots may see higher resolution rates and customer satisfaction, thanks to the model’s blend of speed and smarts.
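One way a support system might decide when to invoke the extended mode is a simple router in front of the model. The sketch below is purely illustrative: the keyword list, the word-count threshold, and the 4,000-token budget are hypothetical values I picked for the example, and a production system would likely use a more robust signal.

```python
from typing import Dict

# Hypothetical signals that a query deserves slower, deeper reasoning.
COMPLEX_HINTS = ("troubleshoot", "error", "not working", "step by step", "why")


def choose_mode(query: str, long_query_words: int = 40) -> Dict[str, object]:
    """Pick standard or extended mode with a simple, illustrative heuristic."""
    text = query.lower()
    looks_complex = (
        len(text.split()) > long_query_words
        or any(hint in text for hint in COMPLEX_HINTS)
    )
    if looks_complex:
        return {"mode": "extended", "budget_tokens": 4000}
    return {"mode": "standard", "budget_tokens": 0}


simple = choose_mode("What are your opening hours?")
hard = choose_mode("My router shows an error after the update, help me troubleshoot.")
print(simple)
print(hard)
```

The point of routing is cost and latency: simple questions skip the reasoning budget entirely, while the harder ones pay for it only when it is likely to help.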

Research and Education

In fields like scientific research or education, Claude 3.7 can be a valuable assistant. Researchers can use the model to explore hypotheses by asking the AI to work through scientific problems or analyze datasets (with the AI describing each step of reasoning in extended mode). Its strong performance in math and physics problem-solving means it can assist with checking work or suggesting approaches to complex equations.

Educators or students might use Claude 3.7 as a tutor that can break down difficult concepts into simpler explanations, or to generate practice problems and detailed solutions. The industry impact here is an AI that can augment human expertise – helping professionals to not only get answers but to understand the why and how behind those answers, due to the model’s ability to explain its reasoning.

Across industries, the common theme is that Claude 3.7 Sonnet enables more advanced and reliable AI assistance in tasks that were previously too complex for AI alone. Its introduction of hybrid reasoning lowers the barrier between quick AI helpers and deep thinkers – now one system can adapt on the fly, which can streamline workflows and open up new use cases. Businesses adopting Claude 3.7 can automate more without sacrificing quality, whether it’s generating production-ready code with fewer errors or handling intricate decision-making processes in a business setting.

The Bottom Line

Claude 3.7 Sonnet gives us a balanced advancement in AI: it's both faster and smarter, combining technical prowess with practical usability. By building on the foundation of Claude 3.5 and addressing its limitations, Anthropic has delivered a model that can cater to a wide range of needs – from everyday questions to specialized professional tasks – all while maintaining clarity, reliability, and an engaging user experience.

What's particularly noteworthy is the accelerating pace of AI development that Claude 3.7 (and LLMs in general) represents. Compared with Claude 3.5, capabilities improved significantly across multiple dimensions: reasoning depth, coding proficiency, context understanding, and output length. This rapid evolution suggests we may be in a period of unusually fast progress toward AGI.

This acceleration raises important questions about the timeline for Artificial General Intelligence (AGI). If models continue improving at this rate – gaining both specialized capabilities and generalized reasoning abilities – we may reach AGI-level systems sooner than many experts previously anticipated. The integration of fast and deep thinking modes in a single model is particularly significant, as it begins to mirror how human cognition operates across different contexts and complexity levels. Each new model seems to close the gap between narrow AI and more general intelligence, suggesting that the path to AGI might be shorter than we thought even a year ago.

However, it also emphasizes the importance of responsible AI development as we potentially approach AGI capabilities faster than expected. The balance that Anthropic has struck between advancing capabilities and maintaining reliability and safety will be increasingly important in the coming iterations of AI technology.

Keep a lookout for the next edition of AI Uncovered!
