AI-Generated Media in Politics

What’s this about: AI-generated media is one tool in the AI arsenal being deployed by governments and independent actors all across the globe. This new and effective tool is often used to target political parties and figures. At the same time, AI-generated media can help political parties become more in touch with their base, and it’s a key tool in the fight against disinformation. With a political environment as strained as ours, a single piece of fake media can turn our world upside down, which is why it’s crucial for us to understand its involvement in today’s politics.

Deepfakes in Politics

Deepfakes, which are usually a type of fake media involving images and videos, have been making a lot of headlines over the last few years, and not for good reasons. They are increasingly being deployed by bad actors to trick populations into believing a video, statement, or image is real when in fact it’s not. Deepfakes involve the use of deep learning algorithms to create a type of fake media content, usually a video with or without audio. This fake media is fabricated to make it look like someone said or did something that they did not. You can see how this could have major implications on the world stage.

>>> Check out my previous article “Modern Information Wars” to learn more about deepfakes.

The world of politics is getting even stranger than it already is, which seems nearly impossible. The political realm is dipping deeper into the uncanny valley thanks to things like deepfakes.

Just take a look at South Korea, which witnessed a unique use of AI-generated media when it held its presidential election on March 9, 2022. Opposition leader Yoon Suk-yeol came away victorious after his campaign used an unusual strategy, to say the least. His team relied on deepfake technology to create an “AI avatar” of the candidate, which was then deployed to interact with potential voters. That said, the questions voters asked had very little to do with actual policy.

Check out one potential voter’s question and the avatar’s response:

Q: “President Moon Jae-in and (rival presidential candidate) Lee Jae-myung are drowning. Who do you save?”

A: “I’d wish them both good luck.”

To train the avatar, the team used just 20 hours of recorded audio and video from the real Yoon. They say that AI Yoon is the first-ever deepfake candidate.

It’s not easy to tell just how much of an impact the deepfake had on Yoon’s campaign, but it appears to have benefited the candidate. Videos of the AI candidate attracted millions of views, and tens of thousands of people asked it questions. The election was the closest presidential election in South Korea’s history, with Yoon receiving 48.56% of the popular vote. Who’s to say the deepfake Yoon didn’t tip the scales?

Yoon’s campaign could be onto something here. Deepfake technology could help political candidates create avatars of themselves that then speak to their base. If the deepfake candidates are truly focused on the issues and represent the political figure accurately, they could be a very effective tool that allows more accessibility to the candidate.

South Korea is not alone. Here is a look at some other countries recently impacted by deepfakes:

Malaysia: In Malaysia, deepfakes were deployed to create a false video of the Economic Affairs Minister having sex, which damaged his reputation.

Gabon: In the central African country of Gabon, leader Ali Bongo was a target of a deepfake video that suggested he was not healthy enough to hold office. This had major consequences, leading to the military launching an unsuccessful coup attempt.

Belgium: In Belgium, a political group released a deepfake video showing the Prime Minister giving a speech that linked COVID-19 to environmental damage.

The European Union (EU) is also seeing an increased use of deepfakes to sow doubt. In April 2021, a group of European MPs was approached by individuals using deepfake filters to imitate Russian opposition figures during video calls. These fake meetings were aimed at discrediting the Russian opposition led by Alexei Navalny and his team.

We can expect to see more of these types of infiltrations throughout politics all across the globe.

According to a 2020 report by the Brookings Institution, deepfakes will likely distort democratic discourse as well as erode trust in public institutions at large.

AI Text Generation and Bots

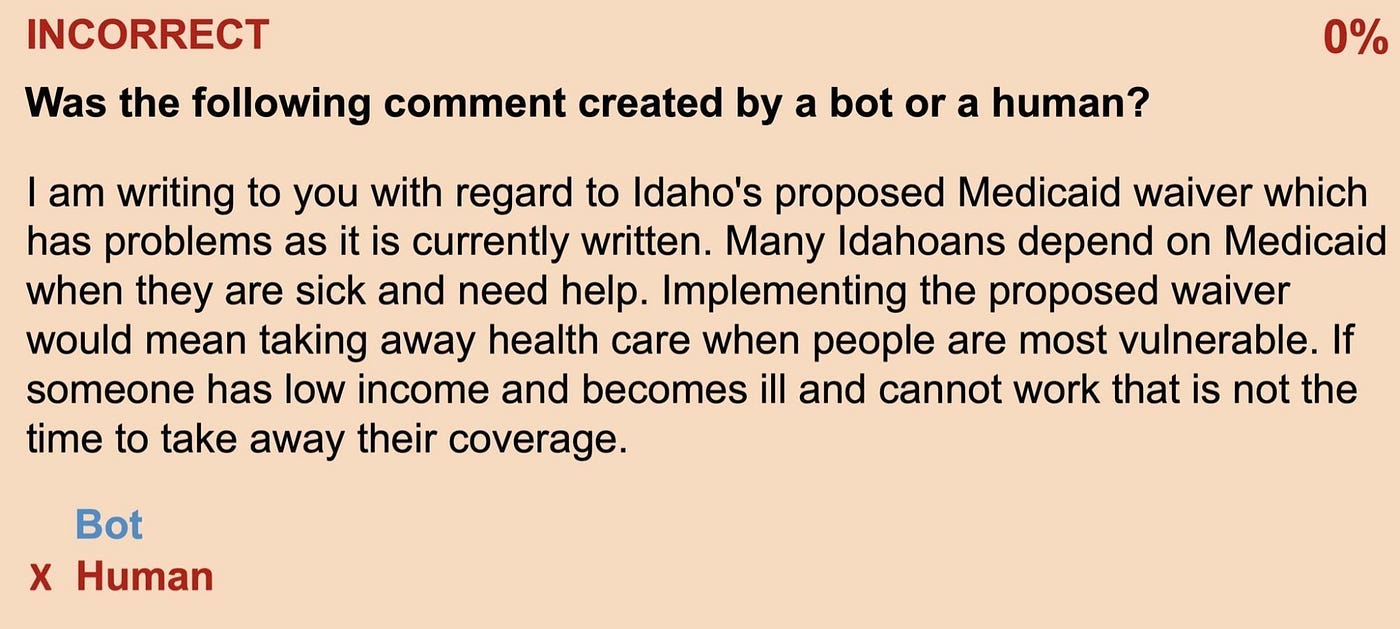

Artificial text generation and fake bots are also being used to skew political discourse, and they are only getting more sophisticated as time goes on. Back in 2019, Harvard senior Max Weiss showed just how dangerous AI-generated text could be when he used a text-generation program to produce 1,000 unique comments, which were submitted in response to a government call for comments on a Medicaid issue.

Each comment easily passed as the work of a real human advocating for a specific policy position, and Medicaid.gov administrators accepted them as legitimate concerns. While Weiss was able to inform the proper administrators that the comments were fake and needed to be removed, you can see how an actual malicious actor could deploy such technology to harm public policy.

Take a look at this example deepfake text that was generated by the algorithm:

When it comes to algorithmic bots, they currently have the biggest impact in the social media realm, with countless bots taking on fake names, biographies, and photos. These are often deployed to distort political debate, and they make it extremely hard for any of us to determine what the actual public sentiment is.

While researchers can often detect these bots fairly easily (just take a look at your own Twitter followers and you can probably do the same), AI bot technology is constantly improving. What’s more concerning is that it’s improving at a faster rate than our detection technologies, so it will only continue to have a greater impact on politics.
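To make the idea of spotting a bot a little more concrete, here is a minimal sketch of the kind of rule-of-thumb check a reader might run on a list of followers. Everything in it is an assumption for illustration: the `Account` fields, the four red flags, and the thresholds are hypothetical and are not taken from any real platform API or published detection method. Real detection systems are far more involved.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical profile fields; not tied to any real platform API."""
    handle: str
    account_age_days: int
    posts_per_day: float
    has_default_photo: bool
    followers: int
    following: int


def bot_suspicion_score(account: Account) -> int:
    """Count how many illustrative red flags an account trips (0 to 4)."""
    flags = [
        account.account_age_days < 30,   # assumed threshold: very new account
        account.posts_per_day > 50,      # assumed threshold: implausibly high posting rate
        account.has_default_photo,       # no real profile picture
        # assumed threshold: follows far more accounts than follow it back
        account.following > 20 * max(account.followers, 1),
    ]
    return sum(flags)


# Made-up example accounts.
accounts = [
    Account("longtime_reader", 2400, 1.5, False, 310, 280),
    Account("patriot_48213", 6, 140.0, True, 3, 900),
]

# Accounts that trip two or more flags are set aside for a closer look.
suspicious = [a.handle for a in accounts if bot_suspicion_score(a) >= 2]
print(suspicious)
```

A simple count of red flags like this is easy to reason about, but it is also easy for a more sophisticated bot to evade, which is exactly the arms race described above.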

Fake Local News Outlets

Another tactic that’s being increasingly used to sow disinformation during major events like electoral processes is the creation of partisan outlets that pretend to be local news organizations. In other words, AI is being used to create a completely fake version of your local paper.

Back in 2019, the Tow Center for Digital Journalism at Columbia Journalism School found at least 450 websites in a network of local and business news organizations. Each one of these organizations was using algorithms to generate a large number of articles and even some reported stories.

These networks of fake local news outlets can be used to stage events, garner attention on controversial issues, and much more. Oftentimes, they present themselves as politically neutral when in fact they’re not. All of these tactics allow the fake news organizations to gather valuable data from users, which can then be used for political targeting.

Microtargeting in Politics

There is a growing concern among experts over a new threat involving microtargeting techniques. Political microtargeting (PMT) is a relatively new technique used by political campaigns all across the globe. It is a type of personalized communication involving the collection of data about people, which is then used to show them targeted political advertisements. When paired with deepfakes or other forms of fake media, malicious actors have a brand new PMT tactic that opens up many possibilities.

What makes fake media PMTs unique? Malicious actors can use algorithms to personalize the fake media for each targeted individual. This lets them expose the population to tailored messages, which in theory amplifies the effects of those messages. People who receive only messages that are personally relevant will likely be more engaged than people who receive a generic message.

An individual might not be susceptible to one specific fake media message but can be susceptible to another one that is tailored to them personally. This means those who create some form of fake media will eventually become good at sending the “right” one to the “right” person, which makes fake media all the riskier in politics.
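The matching logic behind microtargeting is simple enough to sketch in a few lines, which is part of what makes it worth understanding. The toy example below is only an illustration under stated assumptions: the voter profiles, topic weights, and message names are all made up, and real campaigns rely on far richer data and models. It picks, for each person, the message variant that matches their strongest interest and compares its relevance with that of a one-size-fits-all message.

```python
# Hypothetical interest profiles: topic -> weight between 0 and 1.
voters = {
    "voter_a": {"economy": 0.9, "healthcare": 0.2, "immigration": 0.1},
    "voter_b": {"economy": 0.1, "healthcare": 0.8, "immigration": 0.3},
}

# Hypothetical message variants, each tagged with the topic it plays on.
variants = {
    "msg_economy": "economy",
    "msg_healthcare": "healthcare",
    "msg_immigration": "immigration",
}


def relevance(profile: dict, variant_name: str) -> float:
    """How strongly a variant's topic matters to this person (0 if unknown)."""
    return profile.get(variants[variant_name], 0.0)


def pick_variant(profile: dict) -> str:
    """Return the variant whose topic carries the highest weight in the profile."""
    return max(variants, key=lambda name: relevance(profile, name))


# A generic campaign sends everyone the same message.
generic = "msg_immigration"

for voter, profile in voters.items():
    tailored = pick_variant(profile)
    print(
        voter,
        "tailored:", tailored, relevance(profile, tailored),
        "generic:", generic, relevance(profile, generic),
    )
```

In this toy setup, each person scores the tailored message as far more relevant than the generic one, which is the amplification effect described above.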

Disrupting World Politics

As you might’ve guessed, world politics is a perfect target for AI-enabled fake media. The political environment is far too often based on lies and disinformation, so these technologies provide bad actors with a way to take this one step further. The technologies are continuing to improve and emerge as a 21st-century threat, and they could have an unprecedented impact on democratic elections and processes.

But how exactly can fake media disrupt world politics?

For one, it can be used to create false accusations and narratives. This can be achieved in many ways, including by slightly altering a candidate’s authentic speech to make it mean something totally different. This could damage a candidate’s image and change the public’s view of their character, fitness, and mental health.

As we mentioned before, this is a global problem. It’s not only occurring in the United States.

According to the 2018 Reuters Institute Digital News Report, the United States didn’t even land in the top three countries where exposure to fake news is the highest.

AI as a Tool for Good

AI isn’t only used by malicious actors to target populations or public figures. It is also an extremely effective tool that can help political campaigns better understand potential voters and their concerns.

AI can help generate all sorts of media that is personalized to each voter. Whether it’s the right approach or not, voters often focus on a few main issues, so they tune out when the conversation is not applicable to them. AI-generated media could help keep these potential voters engaged while also helping politicians address a wide range of issues that affect a large number of people.

I always like to say that the same AI technology that enables things like malicious fake media should be used against it. AI is truly the key to helping entire populations know when something is real or fake, but it’s not that simple. Detection efforts can take a turn very fast and start approaching unethical censorship, so there must be a balance between privacy, individual security, digital freedoms, and AI-enabled solutions. All of this means governments and organizations across the globe must recognize the threat of AI-enabled media while dedicating enough resources to detecting it and to developing positive applications.

At the same time, it’s crucial for citizens to educate themselves on the topics of AI, media, deepfakes, and reliable news sources. AI can sometimes seem extraordinarily complex, but anyone can learn the basics. There are countless resources online, such as some of my previous articles like “What is Deep Learning” and “What is Natural Language Processing.”

AI is not only a tool for governments, businesses, and malicious actors. By understanding what AI is, citizens can arm themselves with the power of information and knowledge. It will enable anyone to be better prepared to detect AI-generated media on their own. And if this is the case, they are already ahead of the game. Paired with “AI for good” tools, we can create a strong defense against any malicious uses.

AI-generated media will only continue to grow in popularity and accessibility, along with its use as a weapon. We must be equally prepared to defend and promote a diverse democracy with it. As we dive deeper into an AI-driven world, the stakes only get higher.