Top 5 AI Agent Platforms You Should Know

This article reviews five AI agent platforms: crewAI, AutoGen, LangChain, Vertex AI Agent Builder, and Cogniflow.

AI agents are autonomous programs designed to perceive their environment and take actions to achieve specific goals. They're now accessible to enterprises of all sizes, thanks to the proliferation of powerful platforms for building and deploying these agents. These platforms are democratizing AI, enabling organizations to harness cutting-edge technology without requiring deep expertise in machine learning or neural network architecture.

These platforms matter because they do more than supply tooling. They let enterprises:

Rapidly prototype and deploy AI solutions

Customize agents for specific industry needs

Scale AI capabilities across the organization

Integrate advanced AI functionalities into existing systems

As we delve into the top platforms for building AI agents, we'll explore how each can empower your enterprise to stay at the forefront of AI development.

But before diving in, make sure to check out my “Intro to AI Agents and Architectures” to gain a deeper understanding.

1. crewAI

crewAI is an innovative open-source framework designed to facilitate the creation of sophisticated multi-agent AI systems.

Key Features:

Role-based agent design with customizable goals and backstories

Flexible memory system (short-term, long-term, and shared)

Extensible tools framework

Sequential, parallel, or hierarchical multi-agent collaboration

Built-in guardrails and error handling
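To make the role-based idea concrete, here is a minimal sketch in plain Python. It does not use crewAI's actual API: `RoleAgent`, `run_sequential`, and the lambda functions standing in for LLM calls are all hypothetical names chosen for illustration. It shows two agents with a role, goal, and backstory collaborating in series while writing to a shared transcript.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RoleAgent:
    """Hypothetical stand-in for a role-based agent (not crewAI's own class)."""
    role: str
    goal: str
    backstory: str
    # A plain function stands in for the LLM call a real agent would make.
    act: Callable[[str], str]


def run_sequential(agents: List[RoleAgent], task: str) -> List[str]:
    """Run agents in series: each one receives the previous agent's output."""
    shared_memory: List[str] = []  # transcript visible to the whole crew
    current = task
    for agent in agents:
        current = agent.act(current)
        shared_memory.append(f"{agent.role}: {current}")
    return shared_memory


researcher = RoleAgent(
    role="researcher",
    goal="collect background facts",
    backstory="former reference librarian",
    act=lambda text: f"notes on {text}",
)
writer = RoleAgent(
    role="writer",
    goal="turn notes into a draft",
    backstory="technical blogger",
    act=lambda text: f"draft based on {text}",
)

transcript = run_sequential([researcher, writer], "AI agent platforms")
for line in transcript:
    print(line)
```

In a real framework the `act` function would call a language model with the role, goal, and backstory folded into the prompt. The sequential hand-off stays the same.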

Use Cases and Benefits:

Project Management: crewAI can simulate a project management team, with different agents handling scheduling, resource allocation, and risk assessment.

Financial Analysis: Agents can be assigned roles like market analyst, risk assessor, and investment strategist, working together to provide comprehensive financial insights.

Content Creation: A crew of agents can collaborate on content creation, with roles for research, writing, editing, and SEO.

Limitations and Scale:

While crewAI is a powerful framework for building multi-agent AI systems, it comes with certain limitations:

Requires programming expertise, limiting accessibility for non-technical users

Lacks built-in security features like data encryption and OAuth authentication

No hosted solutions, requiring developers to manage deployment and scaling

Can be more resource-intensive due to multiple agent interactions

Complexity in memory management for agents

There is no definitive figure for the maximum project scale crewAI can handle. Its architecture suggests it can manage complex, sizable projects with careful design and resource management, but without significant customization it is likely better suited to small and medium-scale projects than to full-scale enterprise solutions.

Integration Capabilities:

crewAI offers several integration options for proprietary platforms:

Webhooks support (in crewAI+)

gRPC support for high-performance remote procedure calls

API creation capabilities (in crewAI+)

Environment variable configuration for easy deployment

Custom tools creation for interaction with proprietary systems

These features allow for flexible integration with diverse systems, though the exact process may vary depending on the specific proprietary platform.
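As a rough illustration of the last two items, the sketch below wraps a proprietary system as a tool and reads its address from an environment variable. Everything here is an assumption for illustration: the `INVENTORY_API_URL` variable, the `make_inventory_tool` helper, and the `lookup` callback are hypothetical and are not part of crewAI.

```python
import os
from typing import Callable


def make_inventory_tool(lookup: Callable[[str, str], int]) -> Callable[[str], str]:
    """Build a tool function an agent could call to query a proprietary system.

    `lookup(base_url, sku)` is a hypothetical client for the in-house API;
    it is injected so the tool can be tested without a network.
    """
    # Deployment-specific settings come from the environment, not the code.
    base_url = os.environ.get("INVENTORY_API_URL", "http://localhost:8080")

    def tool(sku: str) -> str:
        count = lookup(base_url, sku)
        # Agents consume text, so the result is returned as a short sentence.
        return f"{sku}: {count} in stock"

    return tool


# Usage with a fake client standing in for the real proprietary API.
inventory_tool = make_inventory_tool(lambda base_url, sku: 7)
print(inventory_tool("SKU-1"))
```

Injecting the client keeps the tool independent of any one backend, which helps when the same agent has to run against staging and production systems.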

Pricing:

crewAI offers a free open-source version on GitHub. Pricing for the enterprise offering (crewAI+) is set case by case. Users should also budget for additional costs such as language model API usage and compute resources. For current pricing and terms, contact crewAI's team directly or check the official website.

What Makes crewAI Stand Out?

crewAI's standout feature is its role-based agent design, which enables highly specialized AI teams that can handle complex workflows requiring diverse expertise and perspectives. However, potential users should carefully consider the technical requirements and limitations when evaluating crewAI for their projects.

2. AutoGen

AutoGen, developed by Microsoft, is an open-source framework that's pushing the boundaries of what's possible with AI agents in enterprise environments.

Key Features:

Multi-agent architecture for complex problem-solving

Customizable and conversable agents

Seamless integration with various large language models (LLMs)

Code generation and execution capabilities

Flexible human-in-the-loop functionality
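The combination of conversable agents and human-in-the-loop control can be sketched without any framework. The code below is not AutoGen's API. `converse`, `assistant`, `critic`, and `approve` are hypothetical names, and simple functions stand in for LLM-backed agents and for the human reviewer.

```python
from typing import Callable

Reply = Callable[[str], str]


def converse(
    assistant: Reply,
    critic: Reply,
    task: str,
    approve: Callable[[str], bool],
    max_turns: int = 3,
) -> str:
    """Alternate assistant and critic until a human approves or turns run out."""
    message = task
    draft = ""
    for _ in range(max_turns):
        draft = assistant(message)
        if approve(draft):       # human-in-the-loop checkpoint
            return draft
        message = critic(draft)  # critic feedback becomes the next prompt
    return draft                 # best effort after max_turns


# Deterministic stand-ins so the flow is easy to follow.
def assistant(message: str) -> str:
    return f"answer({message})"


def critic(draft: str) -> str:
    return f"revise {draft}"


# The "human" here approves only a draft that has been revised once.
result = converse(assistant, critic, "sum 1..3", approve=lambda d: "revise" in d)
print(result)
```

In an interactive setting `approve` would prompt a person rather than inspect the string, and the `max_turns` cap is what keeps token costs bounded.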

Use Cases and Benefits:

Software Development: AutoGen's code generation and execution capabilities make it ideal for assisting developers, automating code reviews, and prototyping applications.

Data Analysis: Its multi-agent architecture allows for sophisticated data processing pipelines, where different agents can handle data cleaning, analysis, and visualization tasks.

Customer Service: AutoGen can power advanced chatbots that can understand context, generate appropriate responses, and execute actions on behalf of customers.

Limitations and Scale:

While AutoGen is powerful, it faces several challenges for large-scale enterprise solutions:

Complexity and inconsistency at scale, especially for production-ready applications

High costs and token limits when using powerful models like GPT-4

Context window limitations that may impact analysis of large volumes of content

Significant effort required to achieve enterprise-grade robustness, security, and scalability

AutoGen may be best suited for small to medium-scale applications rather than large enterprise-wide solutions without careful design and testing.

Integration Capabilities:

AutoGen's open-source nature provides flexibility for customization. It supports:

Containerized code execution, potentially allowing interaction with proprietary APIs

Customizable workflows and agent definitions

Integration with cloud services like Azure OpenAI Service

Developers can design agents and conversation flows tailored to work with specific proprietary platforms, though this may require custom development work.

Azure Integration:

AutoGen is integrated with Azure in several ways:

Supports models deployed on Azure OpenAI Service

Can be deployed to Azure Container Apps

Works in conjunction with Azure AI Search (formerly Azure Cognitive Search) for data extraction

Can invoke Azure Logic Apps workflows as functions

LLM Support:

AutoGen supports a wide range of LLMs beyond just OpenAI's models:

Open-source LLMs via Hugging Face Transformers (e.g., LLaMA, Alpaca, Falcon)

FastChat models as a local replacement for OpenAI APIs

Azure OpenAI Service models

Google Gemini models, including multi-modal capabilities

Models from Anthropic, Mistral AI, Together.AI, and Groq

LM Studio for using downloaded language models

This diverse LLM support allows developers to mix and match models based on specific needs and cost considerations.

Pricing:

AutoGen is an open-source framework available for free on GitHub. However, users should consider costs associated with:

API usage for commercial AI models

Compute resources needed to run agents

What Makes AutoGen Stand Out?

AutoGen's standout feature is its multi-agent conversation framework, which enables complex and nuanced problem-solving. However, potential users should carefully consider the technical requirements, limitations, and associated costs when evaluating AutoGen for their projects.

3. LangChain

LangChain is a versatile framework that simplifies the process of building applications powered by language models.

Key Features:

Modular and extensible architecture

Unified interface for multiple LLM providers

Rich collection of pre-built components (prompts, parsers, vector stores)

Agent functionality for complex task execution

Sophisticated memory management for maintaining context
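The value of a unified interface is easiest to see in code. The following sketch is not LangChain's API. `ChatModel`, `EchoModel`, `UpperModel`, and `summarize` are hypothetical names used to show how application code can stay unchanged while the model behind it is swapped.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The single interface the application codes against."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one provider: returns the prompt unchanged."""

    def complete(self, prompt: str) -> str:
        return prompt


class UpperModel:
    """Stand-in for a second provider: returns the prompt upper-cased."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: ChatModel, document: str) -> str:
    """Application logic that does not care which provider is plugged in."""
    prompt = f"Summarize: {document}"
    return model.complete(prompt)


print(summarize(EchoModel(), "q3 report"))
print(summarize(UpperModel(), "q3 report"))
```

Swapping providers then becomes a one-line change at the call site, which is the practical benefit a modular architecture is meant to deliver.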

Use Cases and Benefits:

Document Analysis: LangChain excels at tasks like summarization, entity extraction, and sentiment analysis across large document sets.

Contextual Chatbots: Its memory management capabilities enable the creation of chatbots that maintain context over long conversations.

Research Assistants: LangChain can power AI agents that can search, synthesize, and present information from various sources.

Limitations and Scale:

While LangChain is powerful, it faces several challenges for large-scale enterprise solutions:

Lack of production-readiness: Some critics describe it as a "side project" that changes constantly and carries unpatched vulnerabilities.

Complexity and inconsistency at scale: Performance can be inconsistent for complex, production-ready applications.

High costs: Running LangChain-based systems can be expensive for large-scale deployments due to API usage, hosting, and GPU costs.

Rate limits: API rate limits can be problematic for high-traffic enterprise applications.

Memory management challenges: Efficiently managing agent memory at scale is difficult.

LangChain may be best suited for small to medium-scale applications rather than large enterprise-wide solutions without careful design and testing.

Integration Capabilities:

LangChain offers several options for integrating AI agents into proprietary platforms:

API Integration: Provides APIs to connect and query LLMs from existing code.

Modular Architecture: Specific components can be incorporated into existing systems.

Customizable Agents and Tools: Can be designed to interact with proprietary APIs and services.

Open Source Framework: Allows modification and extension to align with proprietary systems.

LLM Support:

LangChain supports a wide variety of LLM providers and models:

OpenAI (including GPT-3 and GPT-4)

Hugging Face models (e.g., BLOOM, GPT-Neo)

Cohere

AI21 (e.g., Jurassic-1)

Anthropic (e.g., Claude)

Azure OpenAI

Google PaLM

Replicate

Open-source models (e.g., GPT-J, GPT-NeoX, BLOOM) for local running

This broad support allows developers to choose the most suitable models for their applications while leveraging LangChain's abstractions and utilities.

Pricing:

LangSmith, LangChain's hosted tracing and evaluation platform, offers multiple pricing tiers:

Free Developer plan: First 10k traces included per month

Plus plan: $39 per seat/month, with 10k free traces per month

Enterprise plan: Custom pricing

Additional traces are billed at $0.50 per 1k base traces (14-day retention) or $5 per 1k traces for extended 400-day retention.

A "trace" is one complete invocation of an application chain, agent, evaluator run, or playground run in LangSmith. A single trace can include many LLM calls or other tracked events.
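The trace billing above reduces to simple arithmetic, sketched here for the Plus plan. The function name and parameters are illustrative, and the sketch assumes the 10k free traces apply once per account (not per seat) and that every billable trace uses a single retention tier. Check both assumptions against the official pricing page.

```python
def plus_plan_monthly_cost(
    seats: int,
    traces: int,
    rate_per_1k: float = 0.50,   # base traces; use 5.0 for extended retention
    seat_price: float = 39.0,
    free_traces: int = 10_000,
) -> float:
    """Estimate a monthly bill in USD from seat count and trace volume."""
    billable = max(0, traces - free_traces)  # traces beyond the free allowance
    return seats * seat_price + (billable / 1000) * rate_per_1k


# Three seats and 250,000 traces in a month.
base_cost = plus_plan_monthly_cost(seats=3, traces=250_000)
extended_cost = plus_plan_monthly_cost(seats=3, traces=250_000, rate_per_1k=5.0)
print(base_cost)      # 237.0
print(extended_cost)  # 1317.0
```

At this volume the seat fees dominate under base retention, while extended retention makes the trace charges roughly ten times the seat fees.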

The official pricing details can be found on the LangChain website's dedicated pricing page.

Note that while the core LangChain framework is open-source and free, using it with paid LLM APIs will incur those API costs.

What Makes LangChain Stand Out?

LangChain's standout feature is its flexibility and extensibility. The framework's modular design allows developers to easily swap out components, integrate with various LLM providers, and extend functionality with custom tools. This makes LangChain exceptionally adaptable to a wide range of enterprise needs, from simple automation tasks to complex, multi-step workflows involving multiple AI technologies. However, potential users should carefully consider the technical requirements, limitations, and associated costs when evaluating LangChain for their projects, especially for large-scale enterprise deployments.

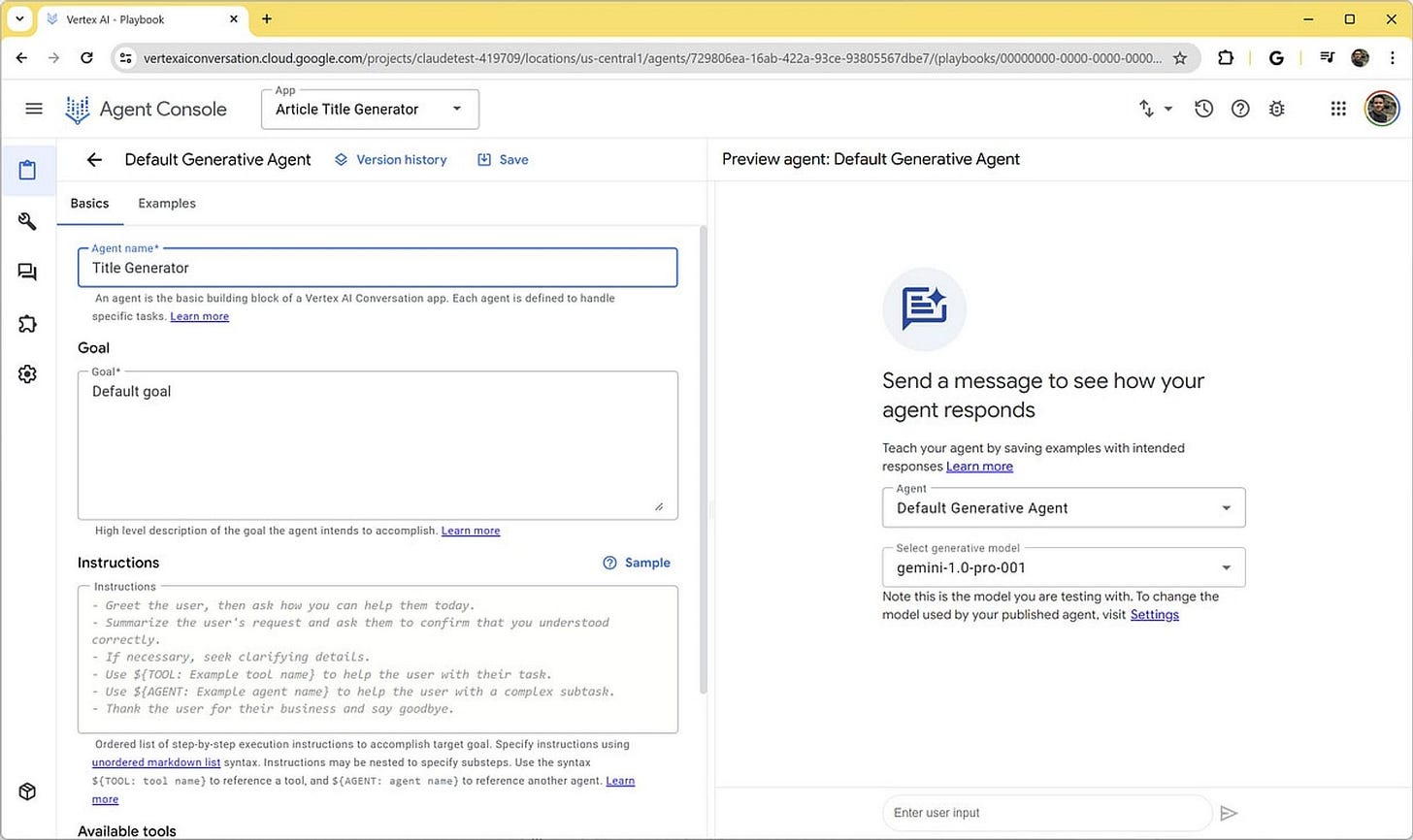

4. Vertex AI Agent Builder

Vertex AI Agent Builder, a product of Google Cloud, offers a robust platform for creating enterprise-grade generative AI applications without deep machine learning expertise.

Key Features:

No-code console for rapid agent development

Advanced frameworks support (e.g., LangChain) for complex use cases

Integration with Google's foundation models and search capabilities

Enterprise data grounding for accurate and contextual responses

Function calls and pre-built extension modules

Enterprise-grade security and compliance features

Use Cases and Benefits:

Customer Support: Create chatbots that can access company knowledge bases and provide accurate, contextual responses.

Internal Knowledge Management: Develop agents that can search and synthesize information across various enterprise data sources.

Process Automation: Build agents that can understand complex requests and execute multi-step processes across different systems.

Limitations and Scale:

While Vertex AI Agent Builder is designed for enterprise use, it has certain limitations:

Quotas and limits on resources (e.g., 1,000,000 documents per project, 300 query requests per minute per project)

Lack of detailed case studies demonstrating large-scale enterprise deployments

May require additional customization and infrastructure work for very large-scale applications

The platform seems well-suited for small to medium-scale applications, with the potential for larger deployments by working with Google Cloud to increase quotas.
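A per-minute query quota like the one listed above is usually handled with client-side throttling. The sketch below is a generic sliding-window limiter, not part of any Google Cloud SDK. The `MinuteQuota` class and its injectable `clock` are hypothetical names, and the default limit of 300 is simply the figure quoted in this article.

```python
import time
from collections import deque
from typing import Callable, Deque


class MinuteQuota:
    """Allow at most `limit` calls within any rolling `window` seconds."""

    def __init__(
        self,
        limit: int = 300,
        window: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        self.sent: Deque[float] = deque()  # timestamps of accepted calls

    def try_acquire(self) -> bool:
        """Return True if a request may be sent now, recording it if so."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()
        if len(self.sent) < self.limit:
            self.sent.append(now)
            return True
        return False


# Demonstration with a tiny limit and a fake clock.
fake_now = [0.0]
quota = MinuteQuota(limit=2, clock=lambda: fake_now[0])
results = [quota.try_acquire() for _ in range(3)]  # third call exceeds the limit
fake_now[0] = 60.0                                  # one full window later
results.append(quota.try_acquire())
print(results)
```

Callers that get `False` can queue or back off instead of sending a request the service would reject.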

Integration Capabilities:

Vertex AI Agent Builder offers multiple integration options for proprietary platforms:

APIs for search, chat, recommendations, and other AI capabilities

Webhooks for real-time integration with proprietary workflows

Embeddable widgets for quick UI integration

Serverless deployment via Google Cloud Run

Connectors for enterprise data sources (e.g., Jira, ServiceNow, Hadoop)

LangChain integration for advanced customization

Firebase and mobile SDKs for mobile app integration

Pricing:

Vertex AI Agent Builder pricing is usage-based:

Vertex AI Agents Chat: $12.00 per 1,000 queries

Vertex AI Agents Voice: $0.002 per second

Vertex AI Search (Standard Edition): $2.00 per 1,000 queries

Free tier available with usage limits

Partner model pricing (e.g., Anthropic's Claude) based on input and output tokens
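For budgeting, the per-unit rates above can be combined into a quick estimate. This is a sketch using only the three list prices quoted in this article. It ignores the free tier and partner model token charges, and the function and constant names are illustrative.

```python
from decimal import Decimal

# List prices as quoted in this article (USD).
CHAT_PER_1K_QUERIES = Decimal("12.00")
VOICE_PER_SECOND = Decimal("0.002")
SEARCH_PER_1K_QUERIES = Decimal("2.00")


def estimate_monthly_cost(
    chat_queries: int = 0,
    voice_seconds: int = 0,
    search_queries: int = 0,
) -> Decimal:
    """Sum usage-based charges; Decimal avoids float rounding on money."""
    chat = Decimal(chat_queries) / 1000 * CHAT_PER_1K_QUERIES
    voice = Decimal(voice_seconds) * VOICE_PER_SECOND
    search = Decimal(search_queries) / 1000 * SEARCH_PER_1K_QUERIES
    return chat + voice + search


# 50,000 chat queries, 10 hours of voice, and 200,000 search queries.
total = estimate_monthly_cost(
    chat_queries=50_000,
    voice_seconds=10 * 60 * 60,
    search_queries=200_000,
)
print(total)
```

In this example chat accounts for $600, voice for $72, and search for $400, so chat volume is the number to watch first.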

What Makes Vertex AI Agent Builder Stand Out?

Vertex AI Agent Builder's standout feature is its seamless integration with enterprise data sources and systems. This "grounding" in trusted enterprise data ensures that AI agents provide accurate, up-to-date, and compliant responses. Combined with its enterprise-grade security features and compliance with industry standards like HIPAA and ISO, this makes Vertex AI Agent Builder particularly suitable for organizations with complex data ecosystems and stringent regulatory requirements.

The platform's no-code options and pre-built templates accelerate development, while more advanced users can leverage LangChain integration for custom workflows. This flexibility, coupled with Google Cloud's scalable infrastructure, positions Vertex AI Agent Builder as a strong contender for businesses looking to deploy AI solutions across various scales, from small departmental projects to potentially larger enterprise-wide initiatives.

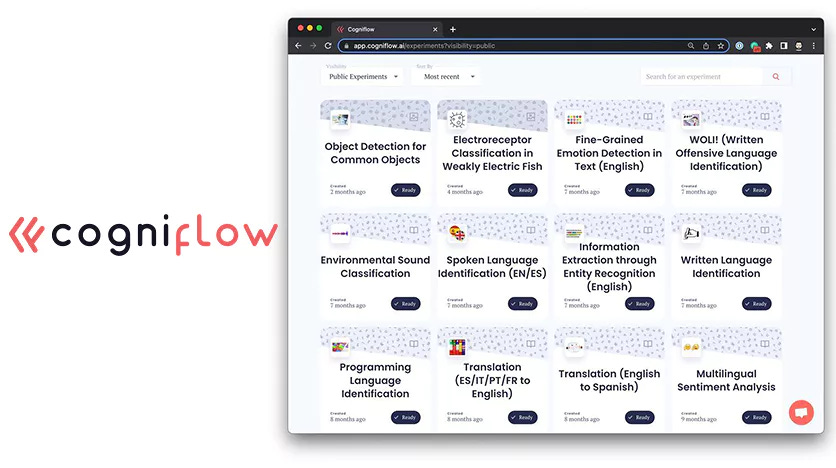

5. Cogniflow

Cogniflow offers a no-code AI platform that democratizes AI development, allowing users to build and deploy AI models without coding expertise.

Key Features:

Intuitive drag-and-drop interface for model building

Support for various data types (text, images, audio, video)

Marketplace of pre-trained models for common use cases

Seamless integration with popular business applications

Collaboration and sharing capabilities

Use Cases and Benefits:

Predictive Maintenance: Build models that analyze sensor data to predict equipment failures before they occur.

Content Moderation: Develop AI agents that can automatically filter and categorize user-generated content across various media types.

Market Trend Analysis: Create models that process diverse data sources to identify emerging market trends and consumer behaviors.

Limitations and Scale:

While Cogniflow is designed for ease of use, it has some limitations for large-scale enterprise solutions:

No-code nature may limit customization for very advanced or niche enterprise use cases

Lack of detailed case studies demonstrating large-scale, complex enterprise deployments

Highest standard tier supports 5 million credits/month, which may require custom contracts for larger deployments

Optimized for accessibility and speed, which may involve trade-offs for maximum complexity and scale

Cogniflow appears well-suited for small to medium-scale AI initiatives and many common enterprise AI scenarios. Larger or more complex deployments may require custom enterprise solutions.

Integration Capabilities:

Cogniflow offers several options for integrating AI agents into proprietary platforms:

API Integration: Expose APIs for connecting any web app to Cogniflow AI models

No-code Integrations: Pre-built connectors for platforms like Excel, Google Sheets, Zapier, Make, and Bubble

File Processing: Ability to process various file types (text, image, video, audio) via URLs or direct uploads

Marketplace and Community: Option to integrate pre-built models from Cogniflow's marketplace

Pricing:

Cogniflow offers monthly and annual pricing plans:

Free: 50 predictions/day, 5 trainings/month, 1 user, non-commercial use

Personal: $50/month ($40/month billed annually), 5,000 predictions/month, 10 trainings/month, 1 user

Professional: $250/month ($200/month billed annually), 50,000 predictions/month, 50 trainings/month, up to 5 users

Enterprise: Custom pricing, volume discounts, unlimited users, active learning, dedicated onboarding

Pricing is based on a credit system, with different models consuming varying numbers of credits. Overage pricing applies for additional usage.
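Choosing a tier from the list above is a matter of matching usage against each plan's caps. The sketch below encodes the monthly prices and limits quoted in this article. It approximates the Free plan's 50 predictions/day as 1,500 per 30-day month and ignores training limits, credits, and overage pricing. `Plan` and `pick_plan` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_price: Optional[int]          # USD; None means custom pricing
    predictions_per_month: Optional[int]  # None means negotiated
    max_users: Optional[int]              # None means unlimited
    commercial: bool


# Ordered cheapest first; figures are the ones quoted in this article.
PLANS = [
    Plan("Free", 0, 50 * 30, 1, False),   # 50/day approximated per month
    Plan("Personal", 50, 5_000, 1, True),
    Plan("Professional", 250, 50_000, 5, True),
    Plan("Enterprise", None, None, None, True),
]


def pick_plan(predictions: int, users: int, commercial: bool = True) -> Plan:
    """Return the cheapest plan whose caps cover the stated usage."""
    for plan in PLANS[:-1]:
        if commercial and not plan.commercial:
            continue
        if predictions > plan.predictions_per_month:
            continue
        if users > plan.max_users:
            continue
        return plan
    return PLANS[-1]  # Enterprise: anything beyond the standard tiers


print(pick_plan(4_000, 1).name)
print(pick_plan(4_000, 3).name)
print(pick_plan(80_000, 2).name)
```

Note how the user count, not the prediction volume, pushes the second example up a tier.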

What Makes Cogniflow Stand Out?

Cogniflow's standout feature is its accessibility. The platform's no-code approach and intuitive interface make AI development accessible to business users and domain experts who may not have programming skills. This democratization of AI can lead to more diverse and industry-specific AI applications, as those closest to the business problems can directly participate in creating AI solutions.

The platform's support for various data types, pre-built models, and easy integrations enables rapid development and deployment of AI solutions across a wide range of use cases. While it may have limitations for the most complex enterprise scenarios, Cogniflow offers a powerful solution for organizations looking to implement AI initiatives quickly and efficiently, especially those without extensive in-house AI expertise.

Choosing the Right Platform for Your Enterprise

Selecting the ideal AI agent platform for your enterprise requires careful consideration of several factors:

Factors to Consider:

Technical Expertise: Assess your team's AI and coding proficiency. Platforms like Cogniflow and Vertex AI Agent Builder offer no-code options, while crewAI, AutoGen, and LangChain require programming expertise.

Use Case Complexity: Consider the complexity of your intended AI applications. For multi-agent systems, AutoGen or crewAI might be more suitable, while simpler use cases might be well-served by Cogniflow.

Integration Requirements: Evaluate how well the platform integrates with your existing infrastructure and data sources. Vertex AI Agent Builder, for instance, offers robust enterprise integration capabilities.

Scalability: Consider your current needs and future growth. Ensure the platform can handle increasing workloads and expanding use cases.

Customization Needs: If you require highly specialized AI agents, platforms like LangChain or crewAI offer extensive customization options.

Security and Compliance: For industries with strict regulatory requirements, consider platforms with strong security features, like Vertex AI Agent Builder.

Budget: Factor in both initial implementation costs and ongoing operational expenses.
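The factors above can be turned into a first-pass shortlist. The sketch below tags each platform with traits taken from the claims made in this article. The tag names and the `shortlist` helper are illustrative simplifications, not an authoritative capability matrix.

```python
from typing import Dict, List, Set

# Traits as characterized in this article; a deliberate simplification.
PLATFORMS: Dict[str, Set[str]] = {
    "crewAI": {"open_source", "multi_agent", "customizable"},
    "AutoGen": {"open_source", "multi_agent"},
    "LangChain": {"open_source", "customizable"},
    "Vertex AI Agent Builder": {"no_code", "enterprise_security"},
    "Cogniflow": {"no_code"},
}


def shortlist(required: Set[str]) -> List[str]:
    """Return platforms whose traits include every required trait."""
    return [name for name, traits in PLATFORMS.items() if required <= traits]


print(shortlist({"no_code"}))
print(shortlist({"open_source", "multi_agent"}))
print(shortlist({"no_code", "multi_agent"}))
```

An empty result, as in the last call, is itself useful: it signals that the requirements conflict and one of them has to give.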

The Bottom Line

The rise of AI agent platforms is ushering in a new era of enterprise innovation. From AutoGen's sophisticated multi-agent systems to Cogniflow's user-friendly no-code approach, each platform we've explored offers unique capabilities to suit diverse enterprise needs. As AI continues to evolve, these platforms will play a crucial role in shaping the future of business operations, customer experiences, and decision-making processes.

The key for enterprises lies in carefully evaluating their specific requirements and choosing a platform that aligns with their technical capabilities, use cases, and long-term AI strategy. By embracing these powerful tools for building AI agents, organizations can position themselves at the forefront of innovation, gaining a competitive edge in an increasingly AI-driven business landscape.

Keep a lookout for the next edition of AI Uncovered!
